Simple Tree Simulations

jhmarcus

2019-04-14

Last updated: 2019-05-02

Checks: 5 1

Knit directory: drift-workflow/analysis/

This reproducible R Markdown analysis was created with workflowr (version 1.2.0). The Report tab describes the reproducibility checks that were applied when the results were created. The Past versions tab lists the development history.

The R Markdown file has unstaged changes. To know which version of the R Markdown file created these results, you’ll want to first commit it to the Git repo. If you’re still working on the analysis, you can ignore this warning. When you’re finished, you can run wflow_publish to commit the R Markdown file and build the HTML.

Great job! The global environment was empty. Objects defined in the global environment can affect the analysis in your R Markdown file in unknown ways. For reproduciblity it’s best to always run the code in an empty environment.

The command set.seed(20190211) was run prior to running the code in the R Markdown file. Setting a seed ensures that any results that rely on randomness, e.g. subsampling or permutations, are reproducible.

Great job! Recording the operating system, R version, and package versions is critical for reproducibility.

Nice! There were no cached chunks for this analysis, so you can be confident that you successfully produced the results during this run.

Great! You are using Git for version control. Tracking code development and connecting the code version to the results is critical for reproducibility. The version displayed above was the version of the Git repository at the time these results were generated.

Note that you need to be careful to ensure that all relevant files for the analysis have been committed to Git prior to generating the results (you can use wflow_publish or wflow_git_commit). workflowr only checks the R Markdown file, but you know if there are other scripts or data files that it depends on. Below is the status of the Git repository when the results were generated:

Ignored files:

Ignored: .Rhistory

Ignored: analysis/.Rhistory

Ignored: analysis/flash_cache/

Ignored: data.tar.gz

Ignored: data/datasets/

Ignored: data/raw/

Ignored: output.tar.gz

Ignored: output/

Unstaged changes:

Modified: analysis/simple_tree_simulation.Rmd

Note that any generated files, e.g. HTML, png, CSS, etc., are not included in this status report because it is ok for generated content to have uncommitted changes.

These are the previous versions of the R Markdown and HTML files. If you’ve configured a remote Git repository (see ?wflow_git_remote), click on the hyperlinks in the table below to view them.

| File | Version | Author | Date | Message |

|---|---|---|---|---|

| Rmd | 2fcfa81 | jhmarcus | 2019-05-02 | added flashr sim |

| html | 2fcfa81 | jhmarcus | 2019-05-02 | added flashr sim |

| Rmd | cb90c17 | jhmarcus | 2019-04-26 | added factor numbers to sim |

| html | cb90c17 | jhmarcus | 2019-04-26 | added factor numbers to sim |

| Rmd | e33ede5 | jhmarcus | 2019-04-24 | updated pop names in badmixture |

| Rmd | e32adcc | jhmarcus | 2019-04-19 | init badmixture sim |

| Rmd | 146bedf | jhmarcus | 2019-04-17 | added structure like plots |

| html | 146bedf | jhmarcus | 2019-04-17 | added structure like plots |

| Rmd | 1a21d43 | jhmarcus | 2019-04-17 | updated simple tree doc |

| html | 1a21d43 | jhmarcus | 2019-04-17 | updated simple tree doc |

| Rmd | 0a3374d | jhmarcus | 2019-04-15 | added simple sim |

| html | 0a3374d | jhmarcus | 2019-04-15 | added simple sim |

Introduction

Here I explore FLASH applied to simulations under a simple population tree, as described in Pickrell et al 2012. Specifically I use a multivariate normal approximation to allele frequencies under a fixed tree and generate genotype data given these allele frequencies. See Figure 1 from Pickrell and Pritchard 2012 that shows the parameterization of the tree:

Import

Here I import the some required packages:

library(ggplot2)

library(dplyr)

library(tidyr)

library(flashr)

library(flashier)

library(alstructure)

source("../code/nmf.R")

source("../code/viz.R")Functions

Here are a couple functions to help with the simulations and plotting:

#' @title Simple Graph Simulation

#'

#' @description Simulates genotypes under a simple population

#' tree as described in Pickrell and Pritchard 2012:

#'

#' https://journals.plos.org/plosgenetics/article?id=10.1371/journal.pgen.1002967

#'

#' @param n_per_pop number of individuals per population

#' @param p number of SNPs

#' @param w admixture weight from population 2 --> 3

#' @param c1 branch length 1

#' @param c2 branch length 2

#' @param c3 branch length 3

#' @param c4 branch length 4

#' @param c5 branch length 5

#' @param c6 branch length 6

#' @param c7 branch length 7

#' @param mu_a mean allele frequency of the ancestral population

#' @sigma_e std. deviation of the allele frequency

#' in the ancestral population

#'

#' @return list of matrix genotypes and allele frequencies

#' allele frequencies in the ancestral population

#'

simple_graph_simulation = function(n_per_pop=10,

p=1000,

w=0.0,

c1=.1,

c2=.1,

c3=.1,

c4=.1,

c5=.05,

c6=.1,

c7=.05,

mu_a=.5,

sigma_e=.05){

# number of populations

n_pops = 4

# simulate ancestral allele freqeuncy

p_a = mu_a + rnorm(p, 0, sigma_e)

# ancestral variance

sigma_a = p_a * (1.0 - p_a)

# covariance matrix specified by the tree

V = matrix(NA, nrow=4, ncol=4)

V[1, 1] = c2 + c6

V[2, 1] = V[1, 2] = c2

V[2, 2] = c2 + c5 + c7

V[3, 1] = V[1, 3] = w * c2

V[3, 2] = V[2, 3] = w * (c2 + c5)

V[3, 3] = (w^2 * (c2 + c5)) + ((1 - w)^2 * (c1 + c3))

V[4, 1] = V[1, 4] = 0.0

V[4, 2] = V[2, 4] = 0.0

V[4, 3] = V[3, 4] = (1.0 - w) * c1

V[4, 4] = c1 + c4

# simulate allele frequencies

P = matrix(NA, nrow=p, ncol=n_pops)

for(j in 1:p){

# simulate from truncated multivariate normal

P[j, ] = tmvtnorm::rtmvnorm(1, rep(p_a[j], n_pops), sigma_a[j] * V,

lower=rep(1e-4, n_pops),

upper=rep(1.0-1e-4, n_pops)

)

}

# simulate genotypes

Y = matrix(rbinom(n_per_pop * p, 2, P[,1]), nrow=p, ncol=n_per_pop)

for(i in 2:n_pops){

Y_i = matrix(rbinom(n_per_pop * p, 2, P[,i]), nrow=p, ncol=n_per_pop)

Y = cbind(Y, Y_i)

}

return(list(Y=t(Y), P=t(P), p_a=p_a))

}

plot_flash_loadings = function(flash_fit, n_per_pop){

l_df = as.data.frame(flash_fit$loadings$normalized.loadings[[1]])

colnames(l_df) = 1:ncol(l_df)

l_df$ID = 1:nrow(l_df)

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -ID, -pop) %>% filter(K != 1)

p1 = ggplot(gath_l_df, aes(x=ID, y=value, color=pop)) +

geom_point() +

facet_wrap(K~., scale="free") +

theme_bw()

p2 = structure_plot(gath_l_df,

colset="Set3",

facet_grp="pop",

facet_levels=paste0("Pop", 1:4),

keep_leg=TRUE,

fact_type="nonnegative")

return(list(p1=p1, p2=p2))

}Tree Simulation

Here I simulate under a tree model by setting the admixture weight \(w=0.0\). I simulate 10 individuals per population and 10,000 SNPs. I also made the branch lengths of the internal branches to be 5 times longer then the external branches.

set.seed(1990)

# number of individuals per pop

n_per_pop = 20

# set w = 0.0 to just simulate from a tree

sim = simple_graph_simulation(w=0.0, p=10000, c1=.5, c2=.5, n_per_pop=n_per_pop)

# data matrix

Y = sim$Y

# centered data matrix

Y_c = scale(Y, center=TRUE, scale=FALSE)

# centered scaled data matrix

Y_cs = scale(Y, center=TRUE, scale=TRUE)

# number of individuals

n = nrow(Y)

# number of SNPs

p = ncol(Y)

# number of factors

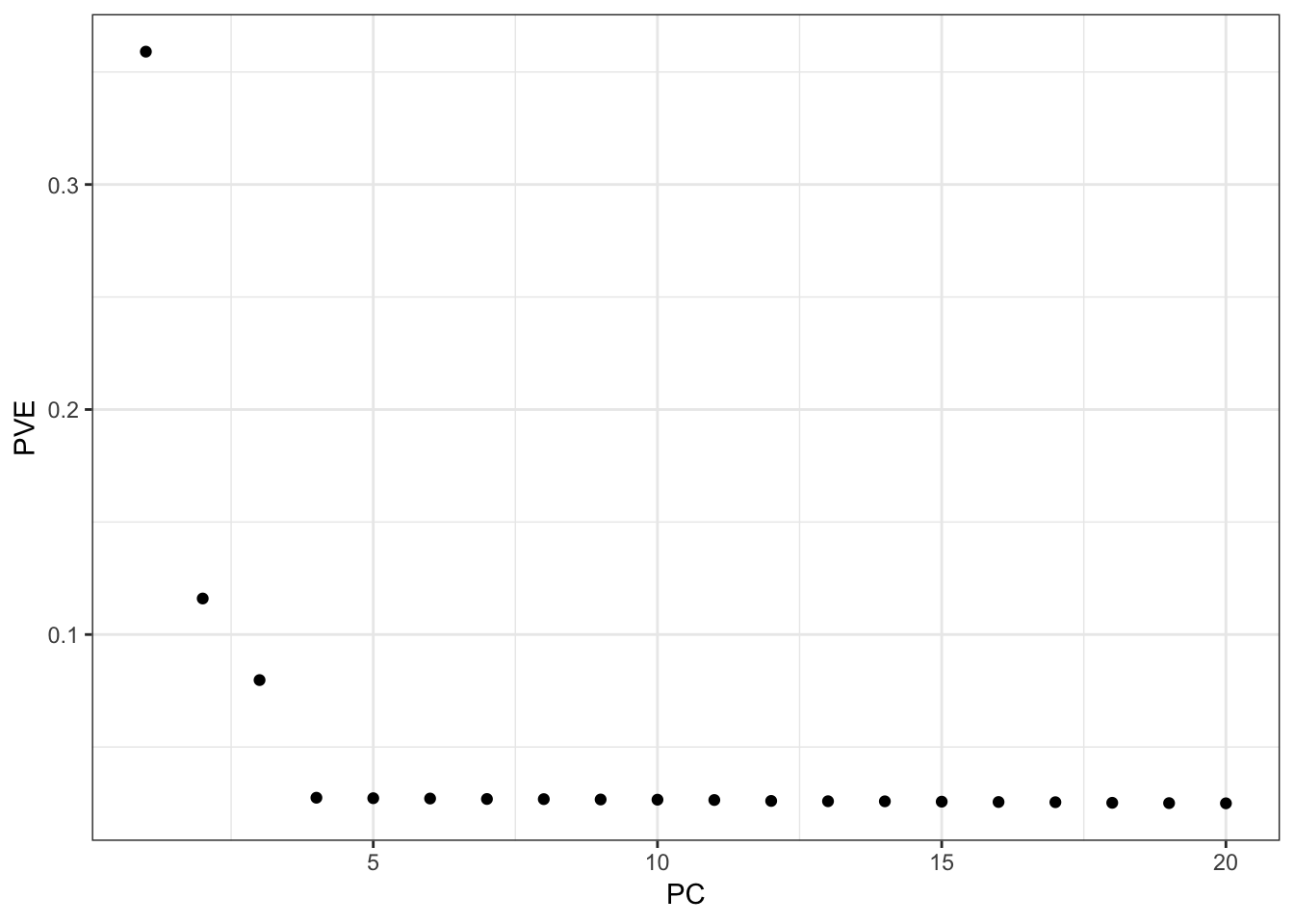

K = 20PCA

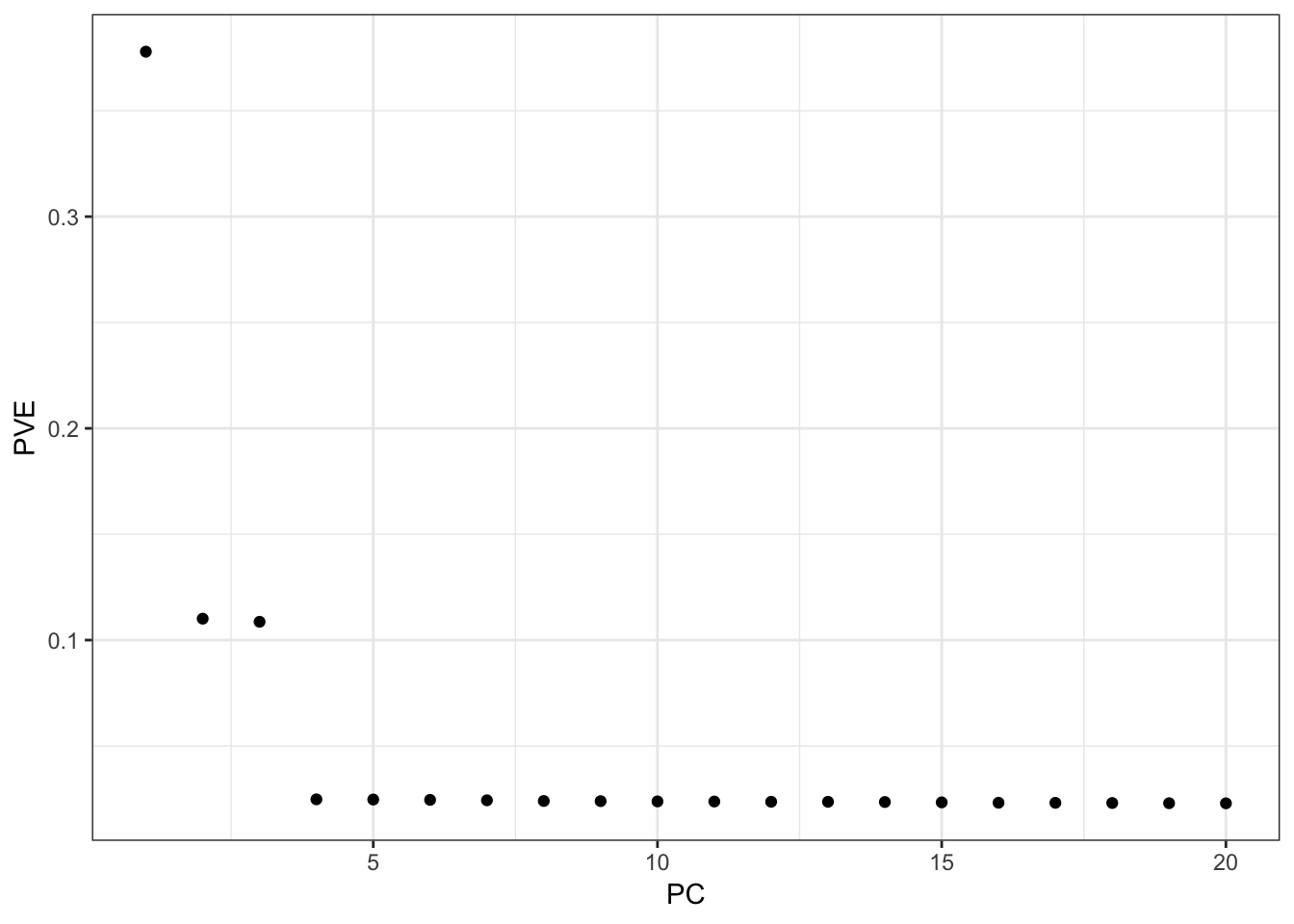

Here I apply PCA to the centered and scaled genotype matrix:

svd_fit = lfa:::trunc.svd(Y_cs, K)

lamb = svd_fit$d^2

p = qplot(1:K, lamb / sum(lamb)) +

xlab("PC") +

ylab("PVE") +

theme_bw()

p

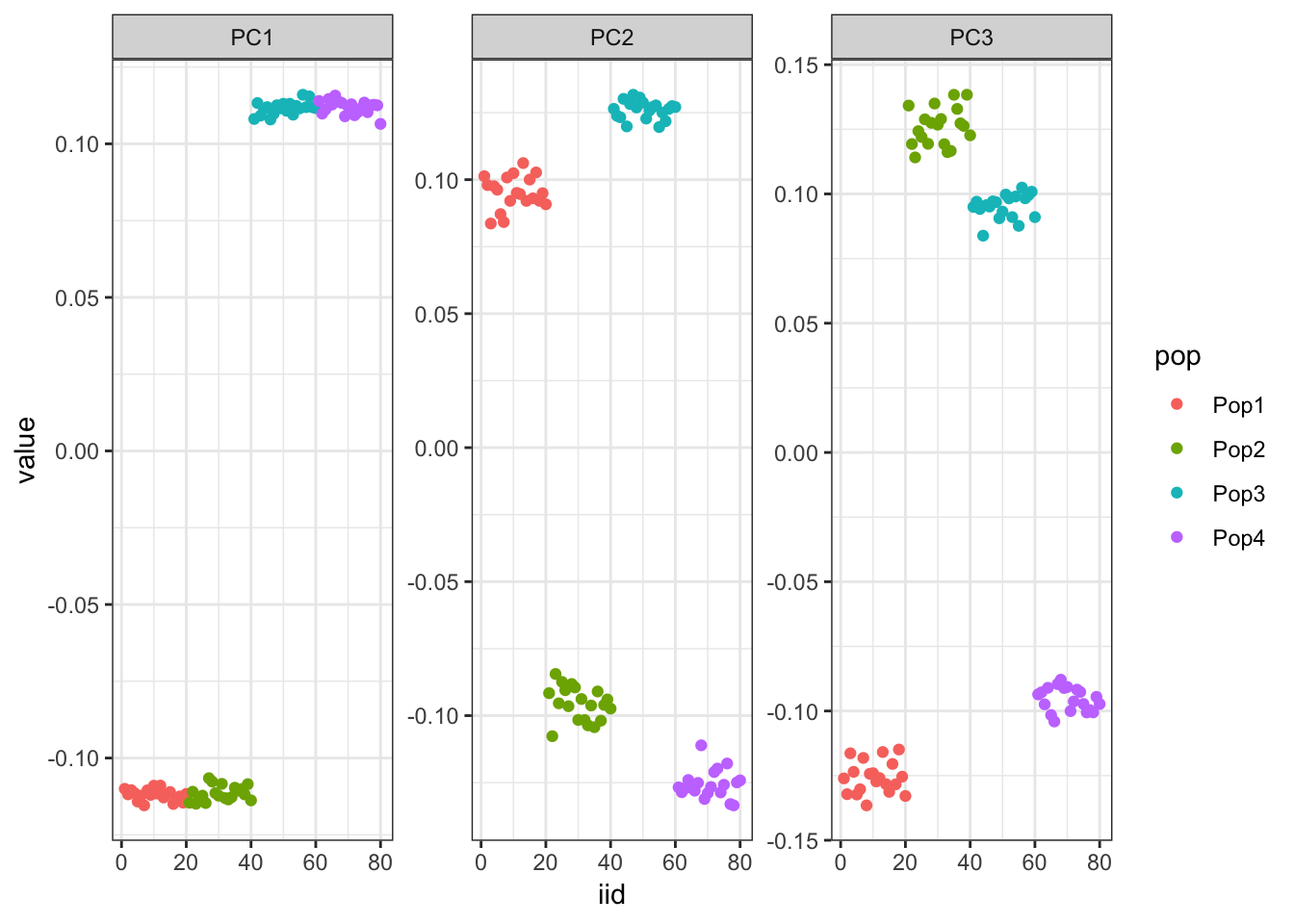

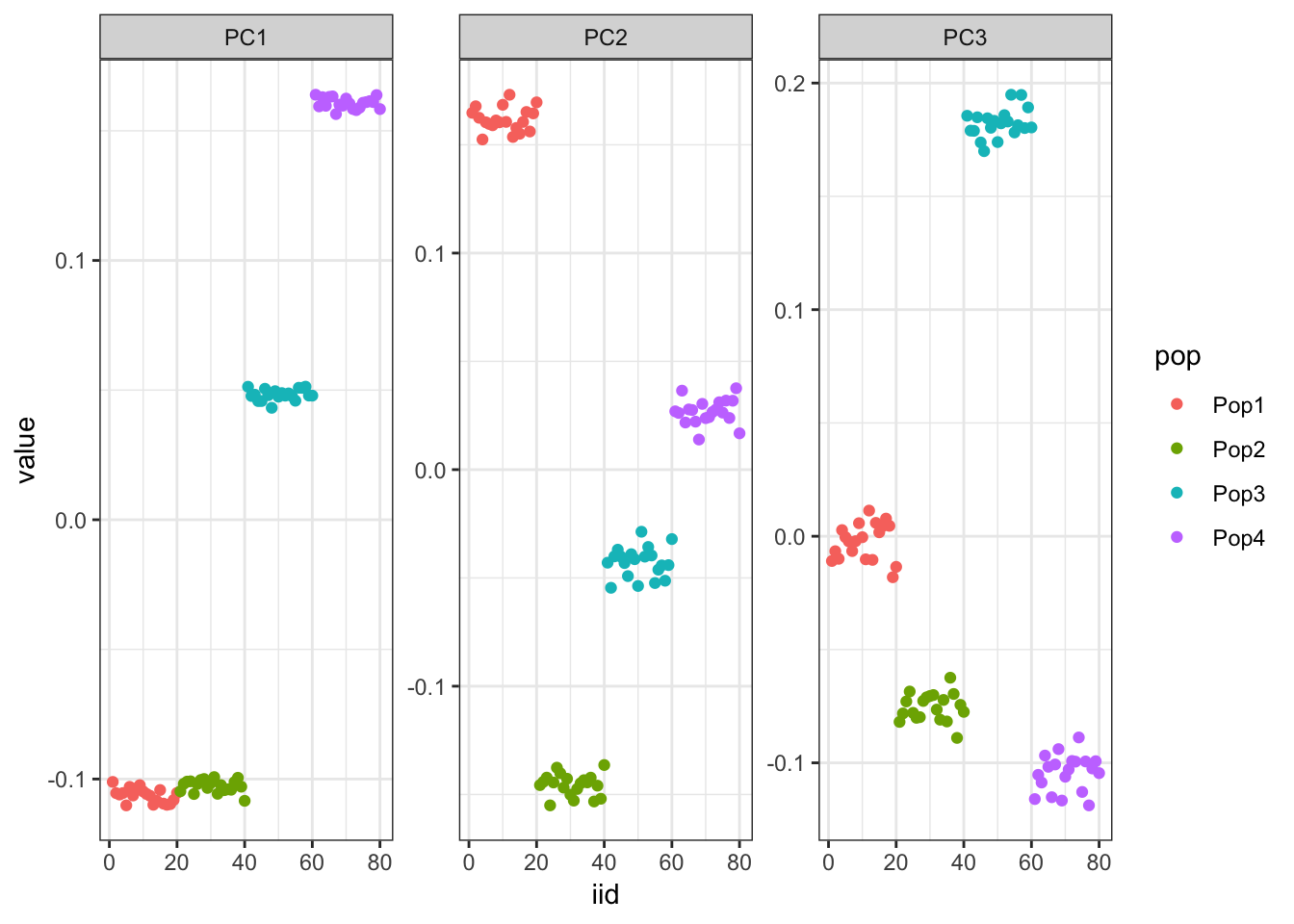

From the PVE plot we can see there is a large drop off after the first 3 PCs so lets just visualize them:

l_df = data.frame(svd_fit$u[,1:3])

colnames(l_df) = paste0("PC", 1:3)

l_df$iid = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(PC, value, -iid, -pop)

p = ggplot(gath_l_df, aes(x=iid, y=value, color=pop)) +

geom_point() +

facet_wrap(PC~., scale="free") +

theme_bw()

p

It looks like PC1 represents the first split on the tree. PC2 and PC3 represent the subsequent splits.

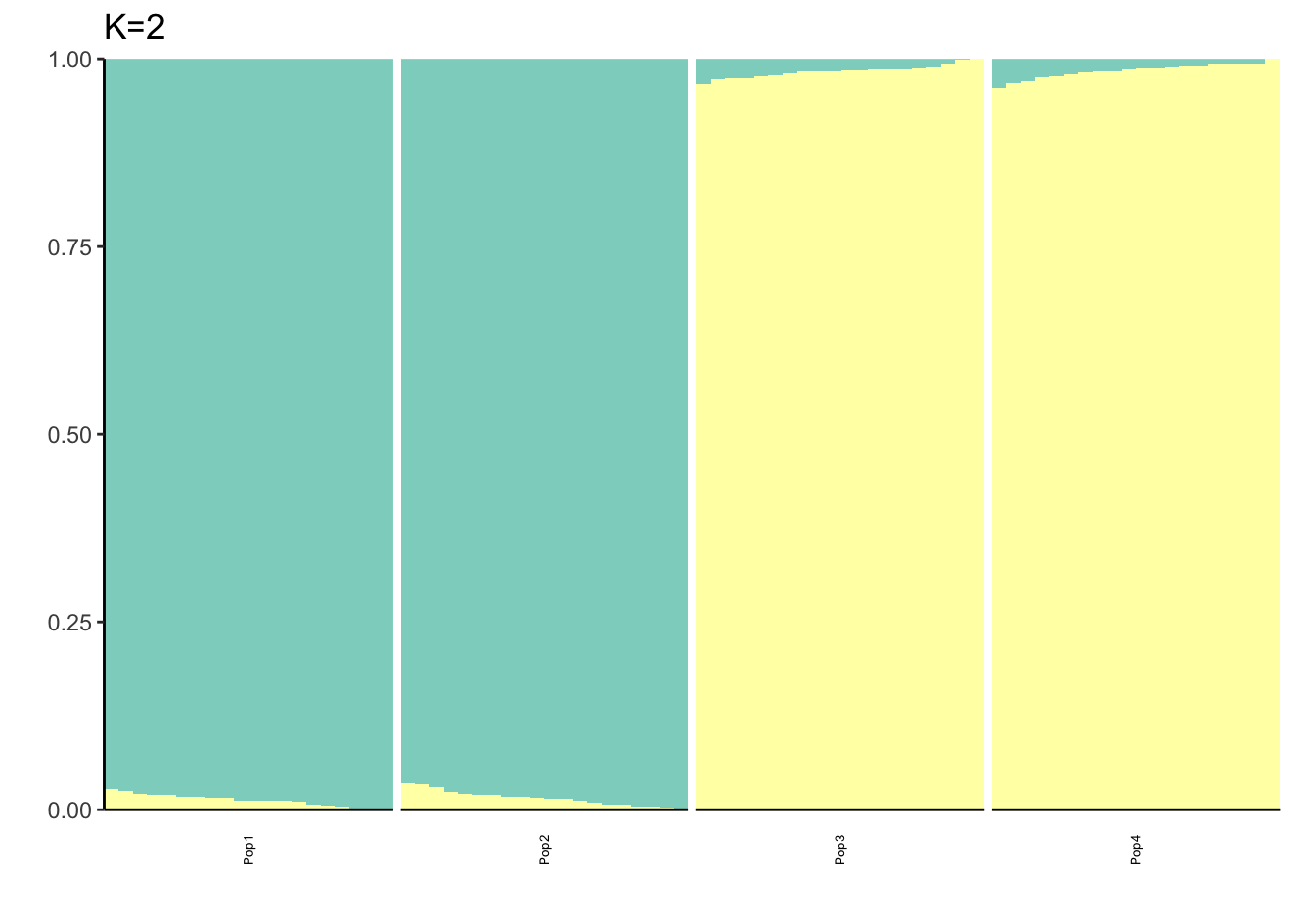

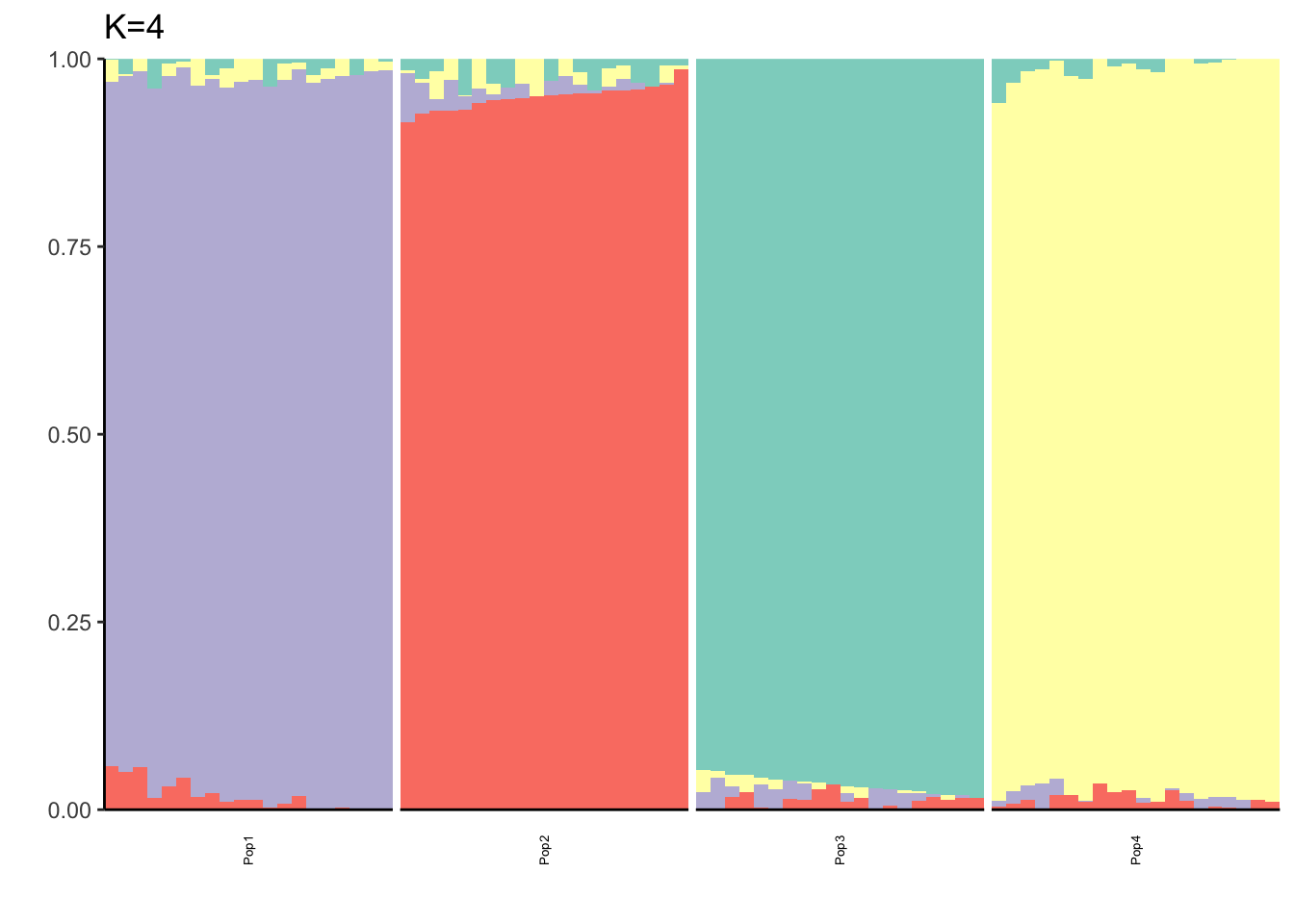

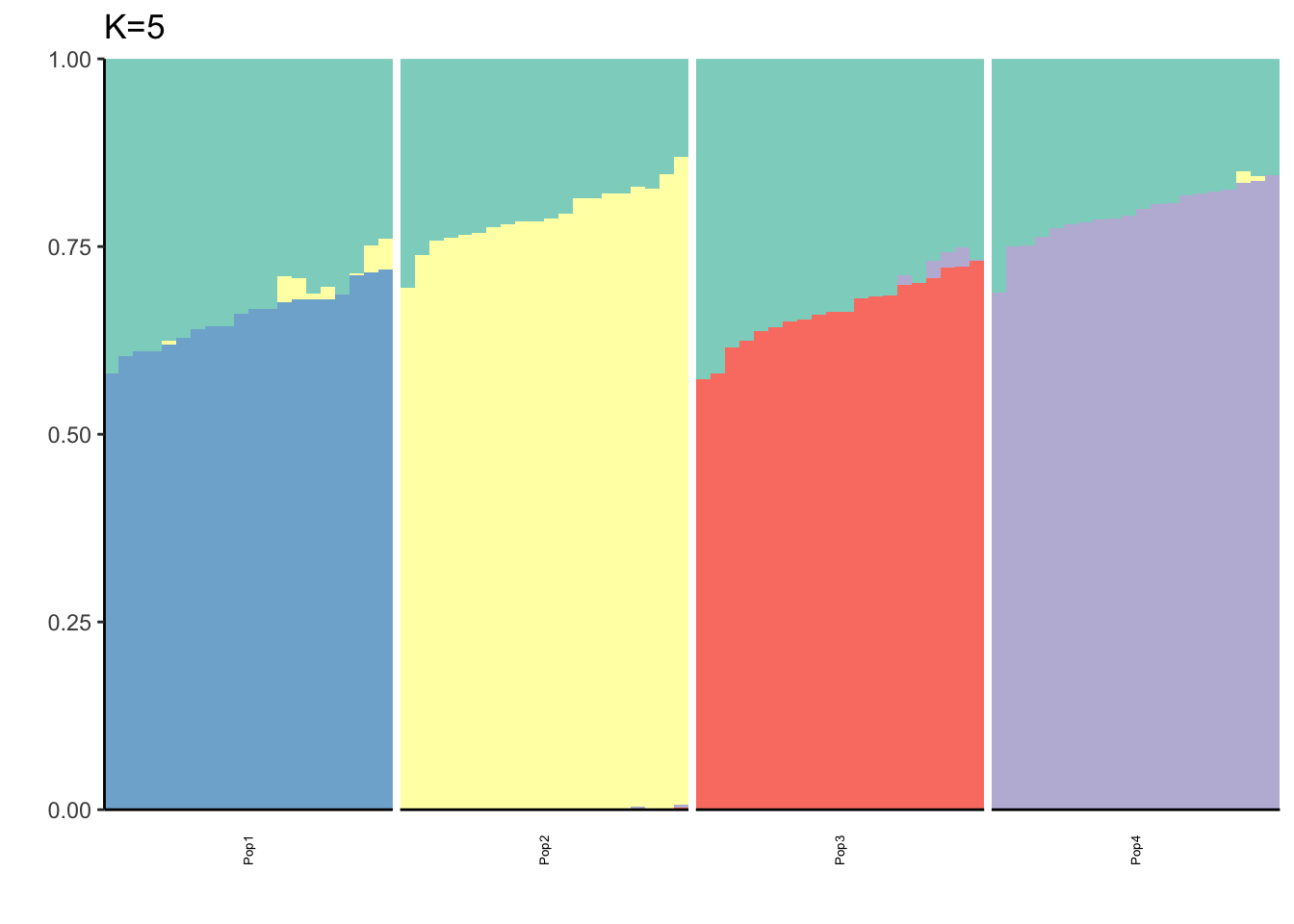

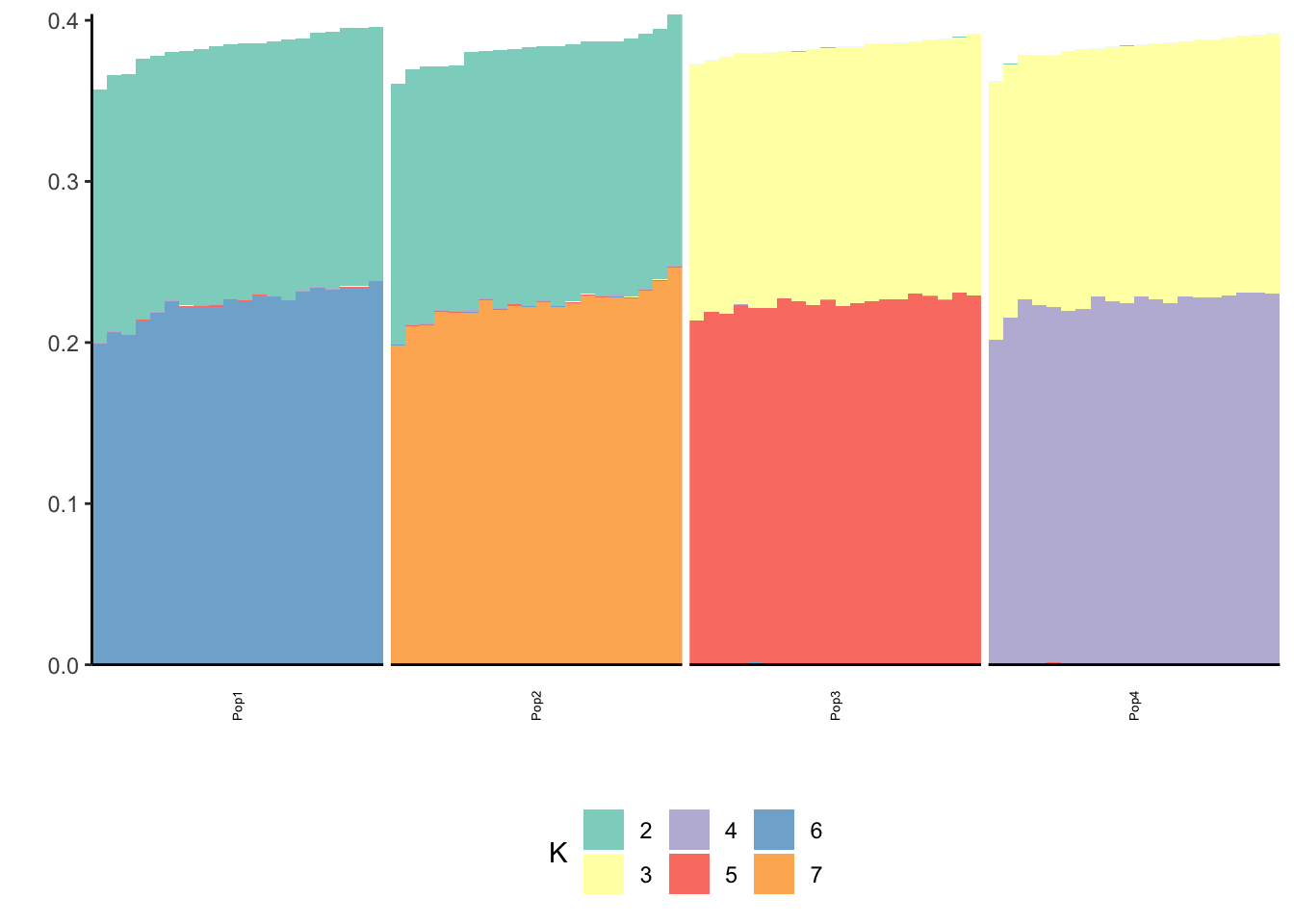

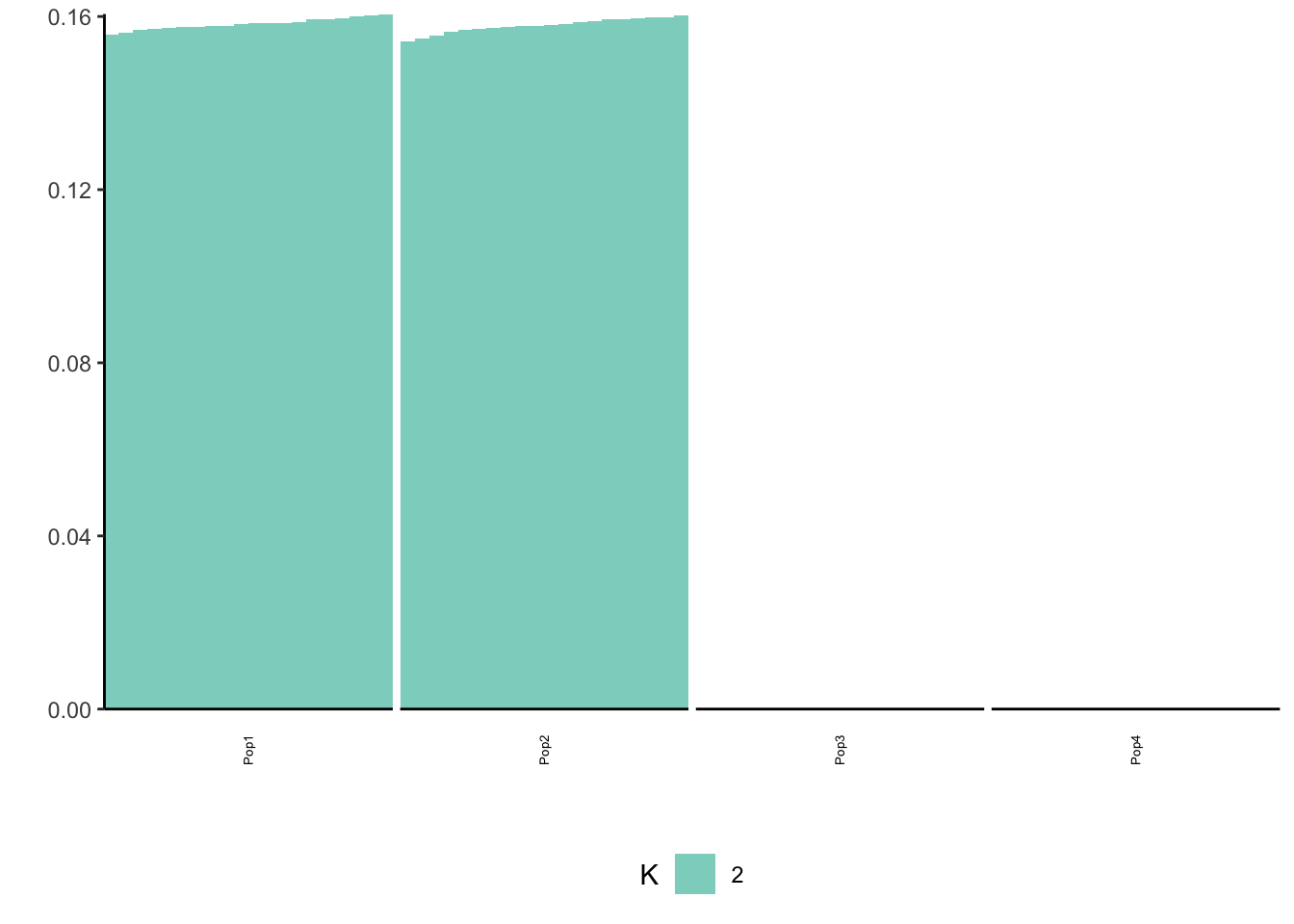

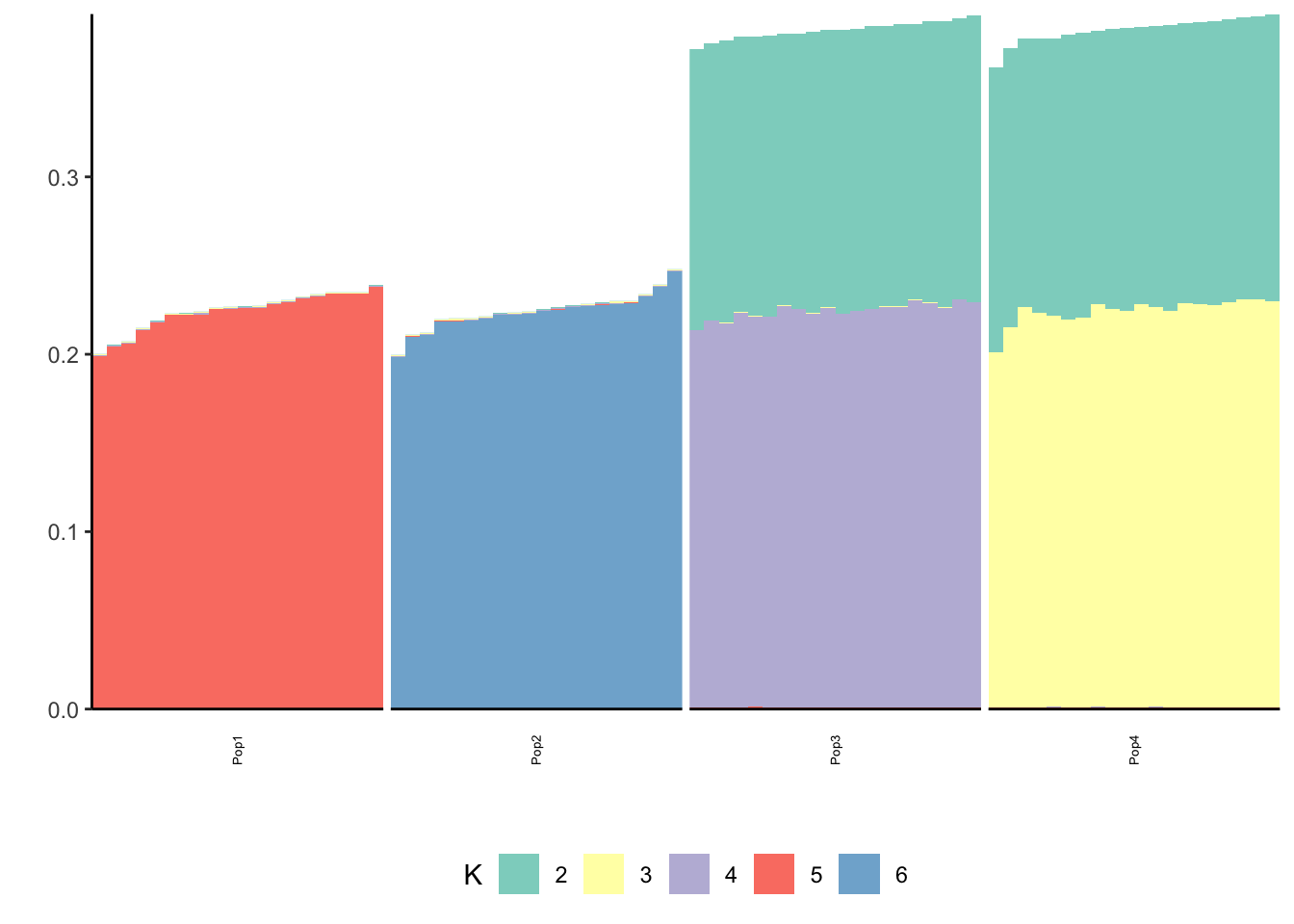

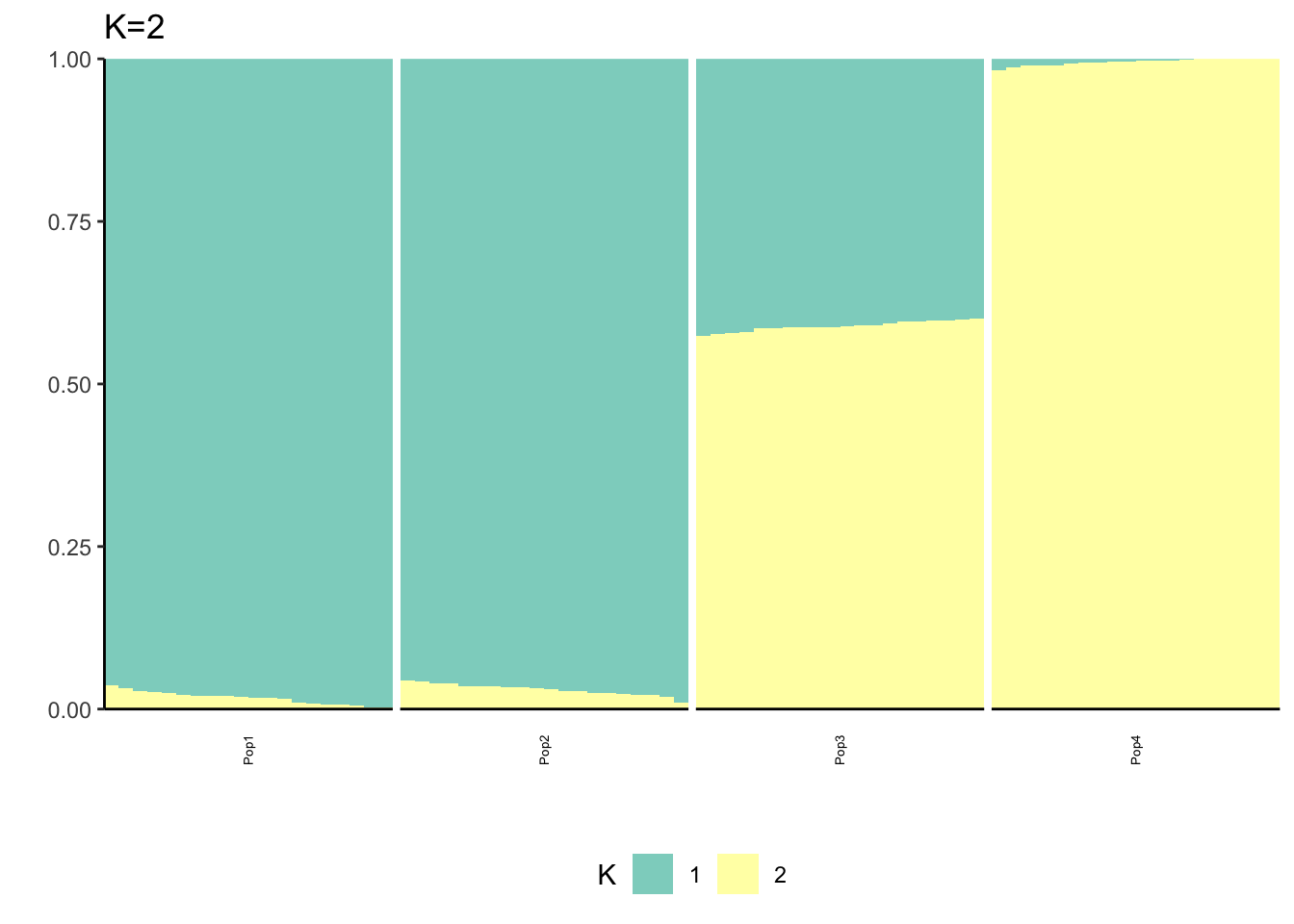

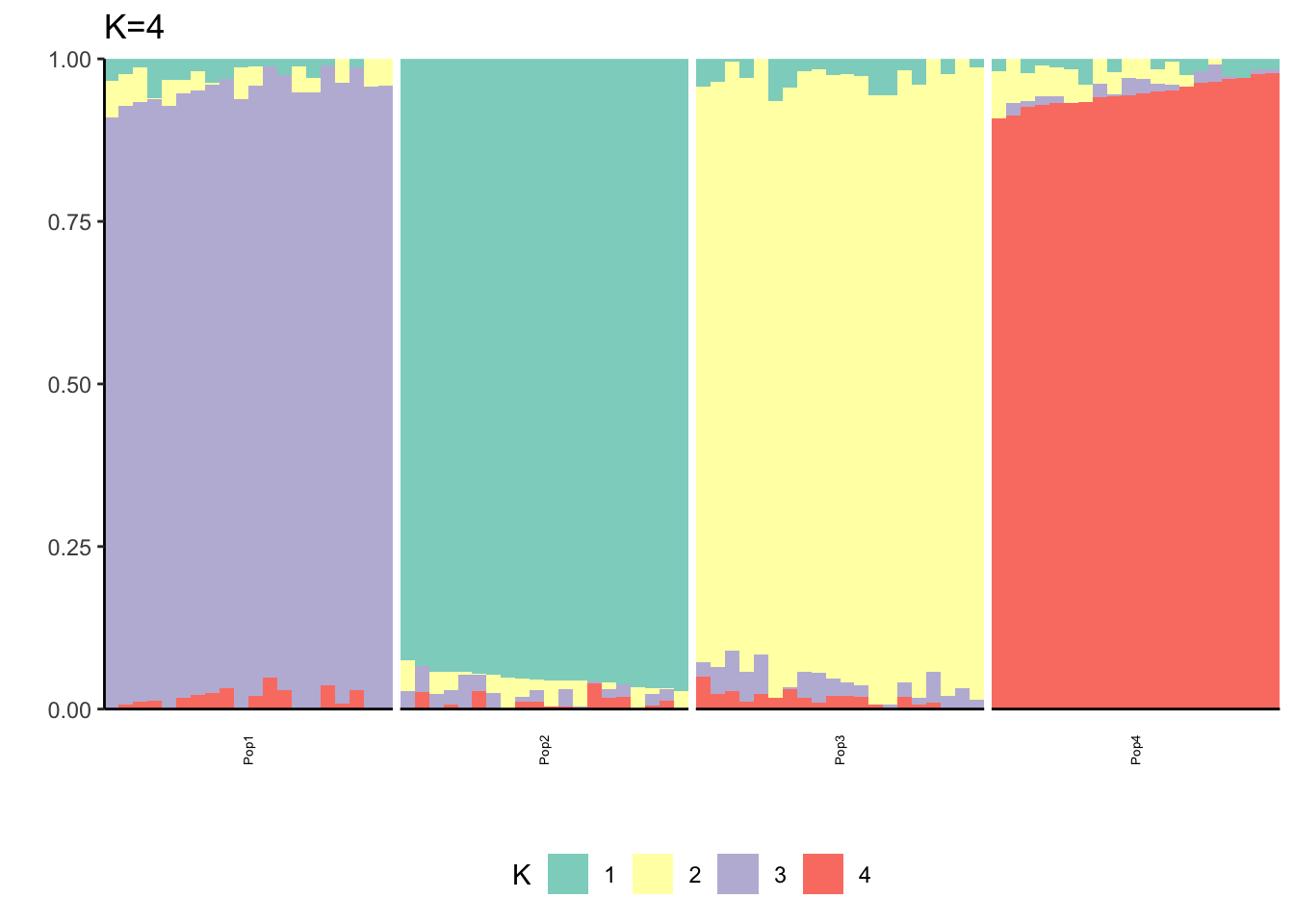

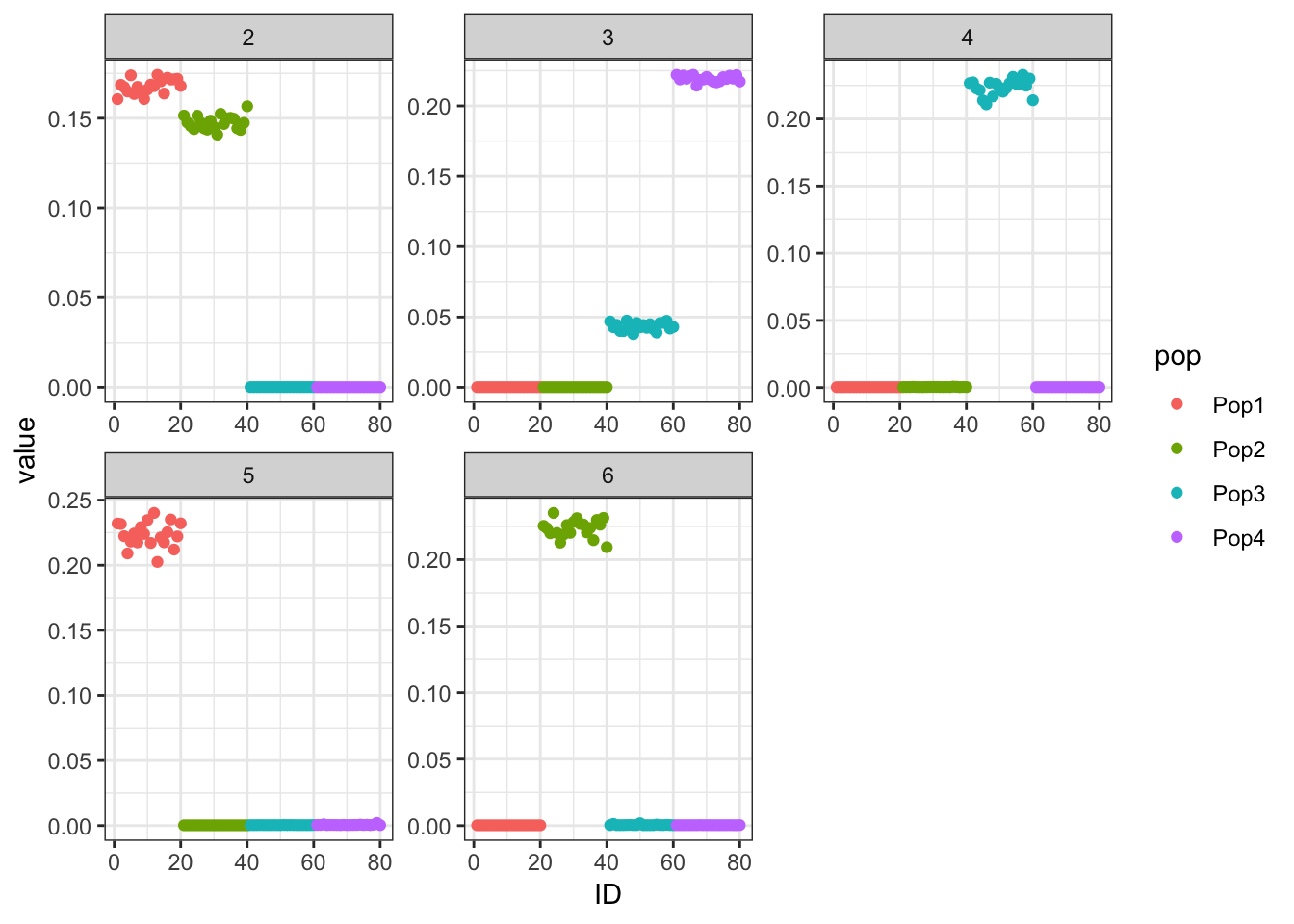

PSD (alstructure)

Here I fit the Pritchard, Stephens, and Donnelly model using alstructure for K=2,…,6:

K = 6

for(k in 2:K){

al_fit = alstructure(t(Y), d_hat = k)

Q = t(al_fit$Q_hat)

l_df = as.data.frame(Q)

colnames(l_df) = 1:k

l_df$ID = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -ID, -pop)

p = structure_plot(gath_l_df,

colset="Set3",

facet_grp="pop",

facet_levels=paste0("Pop", 1:4),

fact_type="structure") +

ggtitle(paste0("K=", k))

print(p)

}

It looks like for \(K=2\) we see the deeper split in the tree, \(K=4\) assigns population specific factors, and all the other K runs are less interpretable.

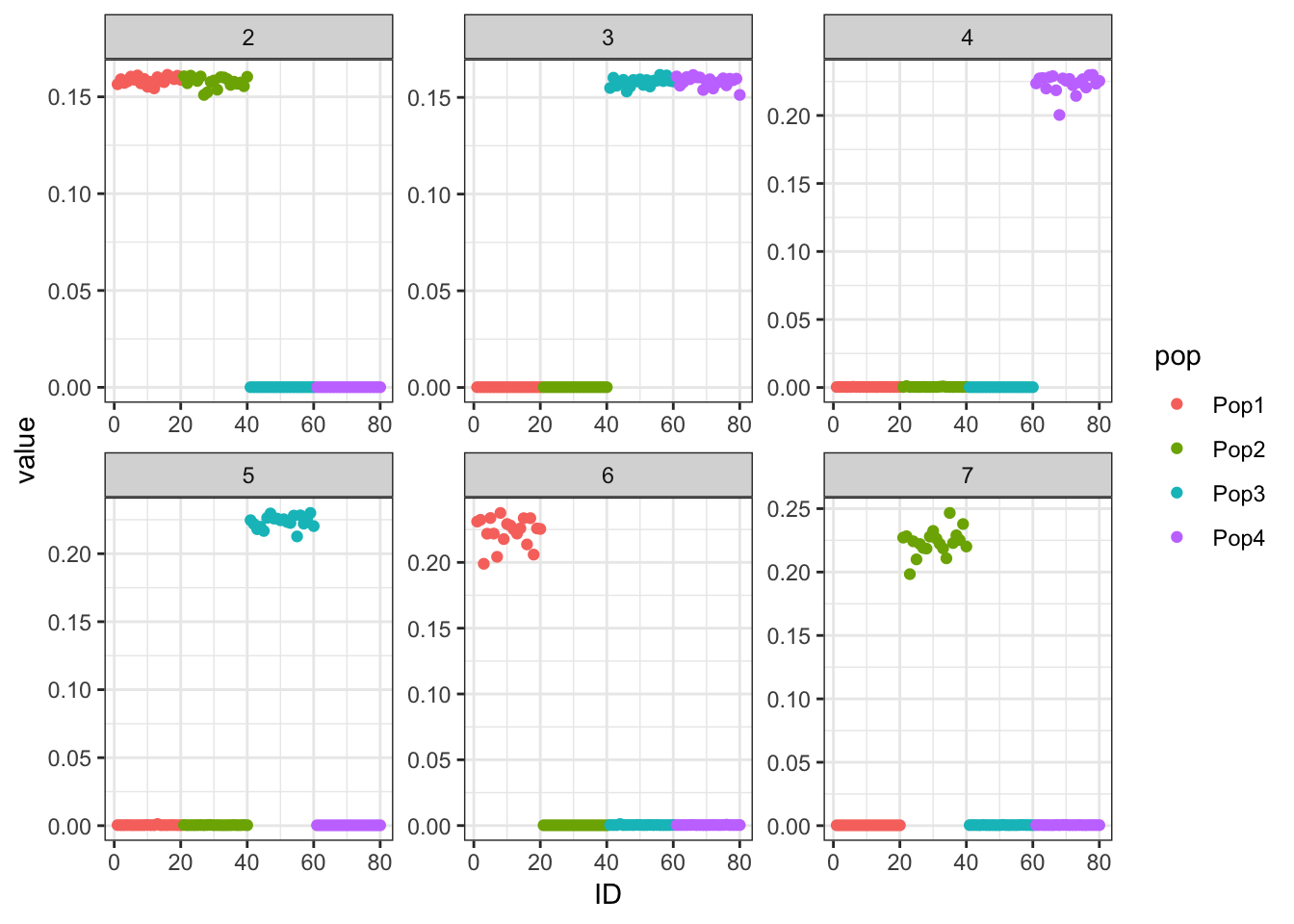

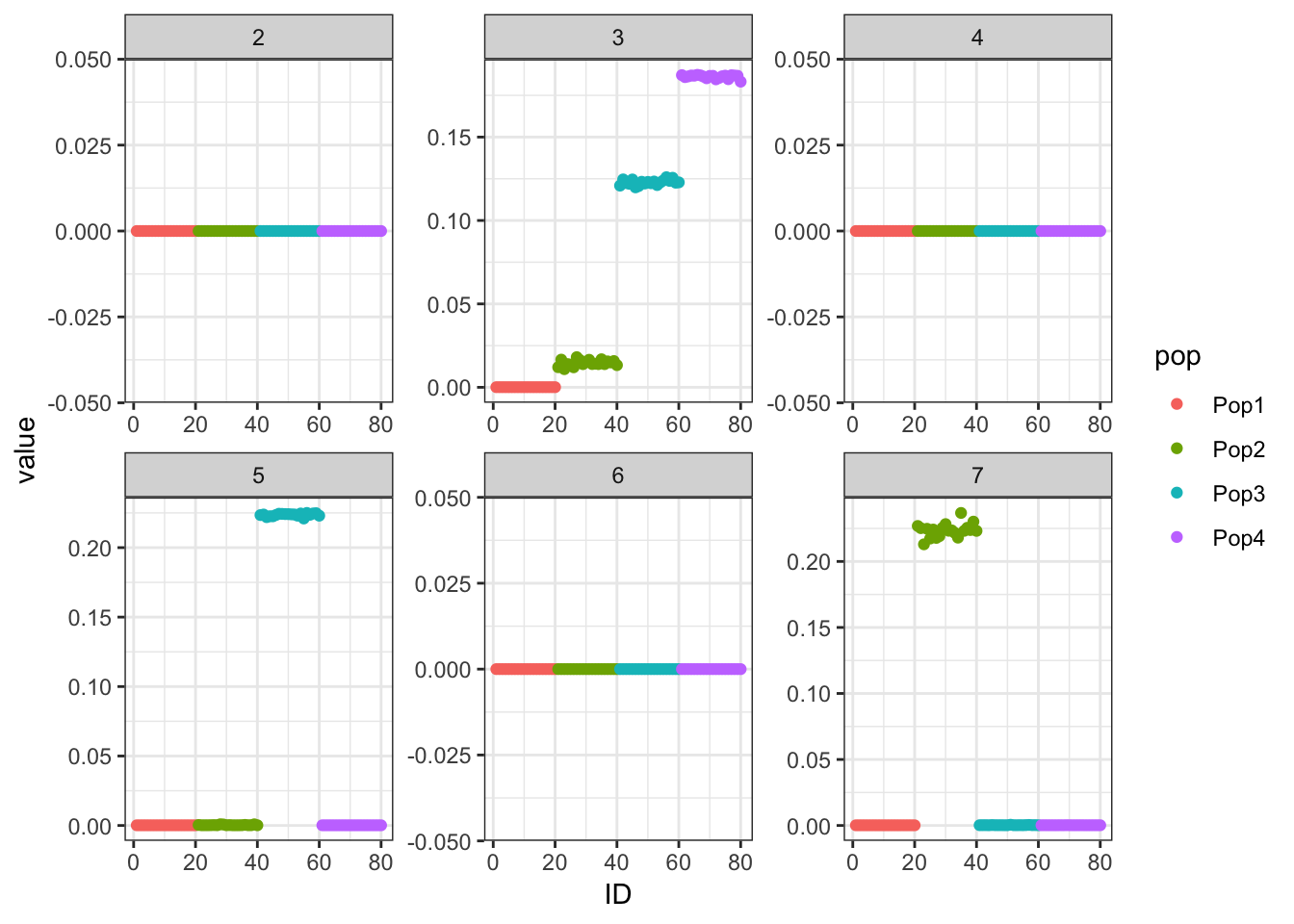

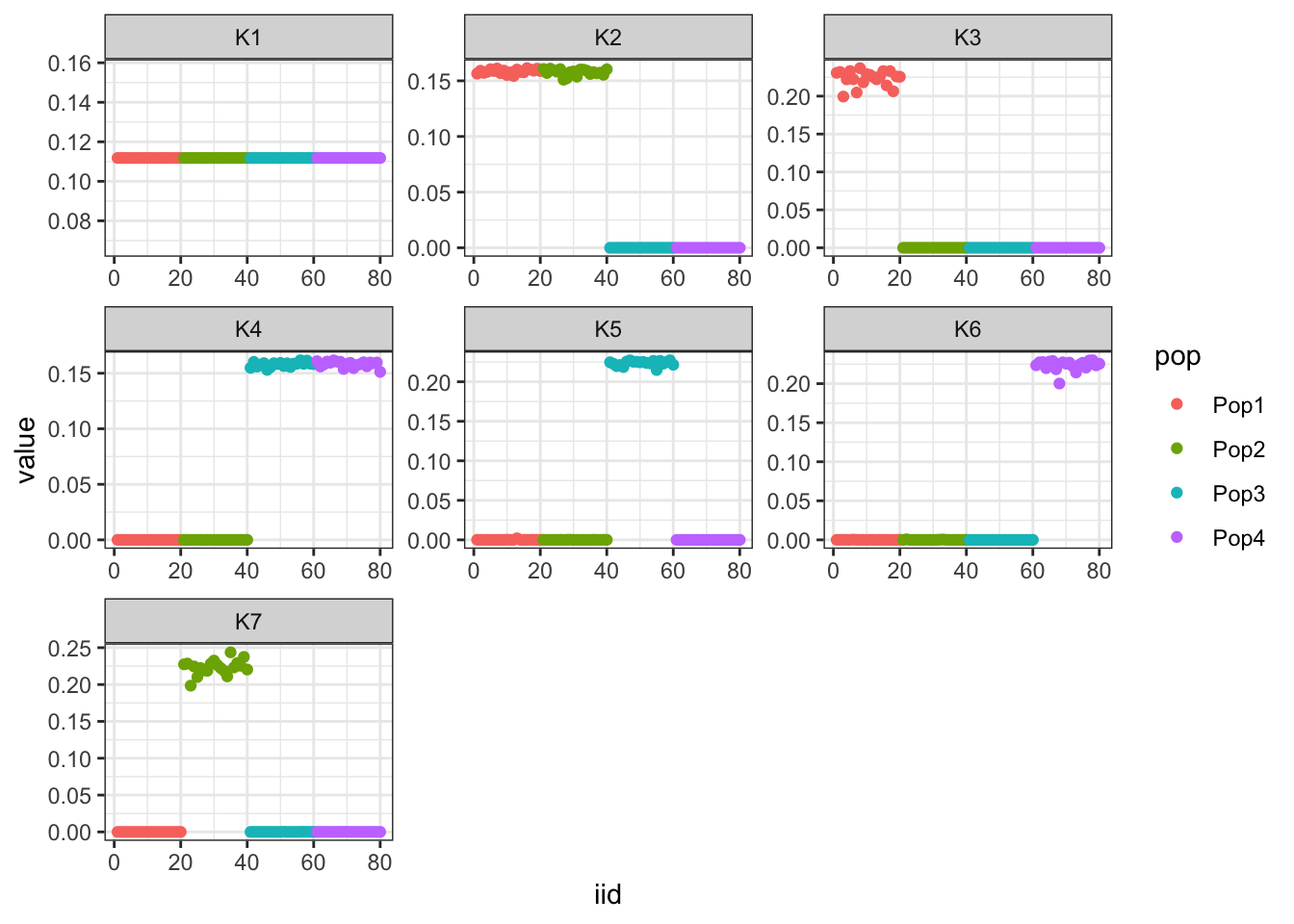

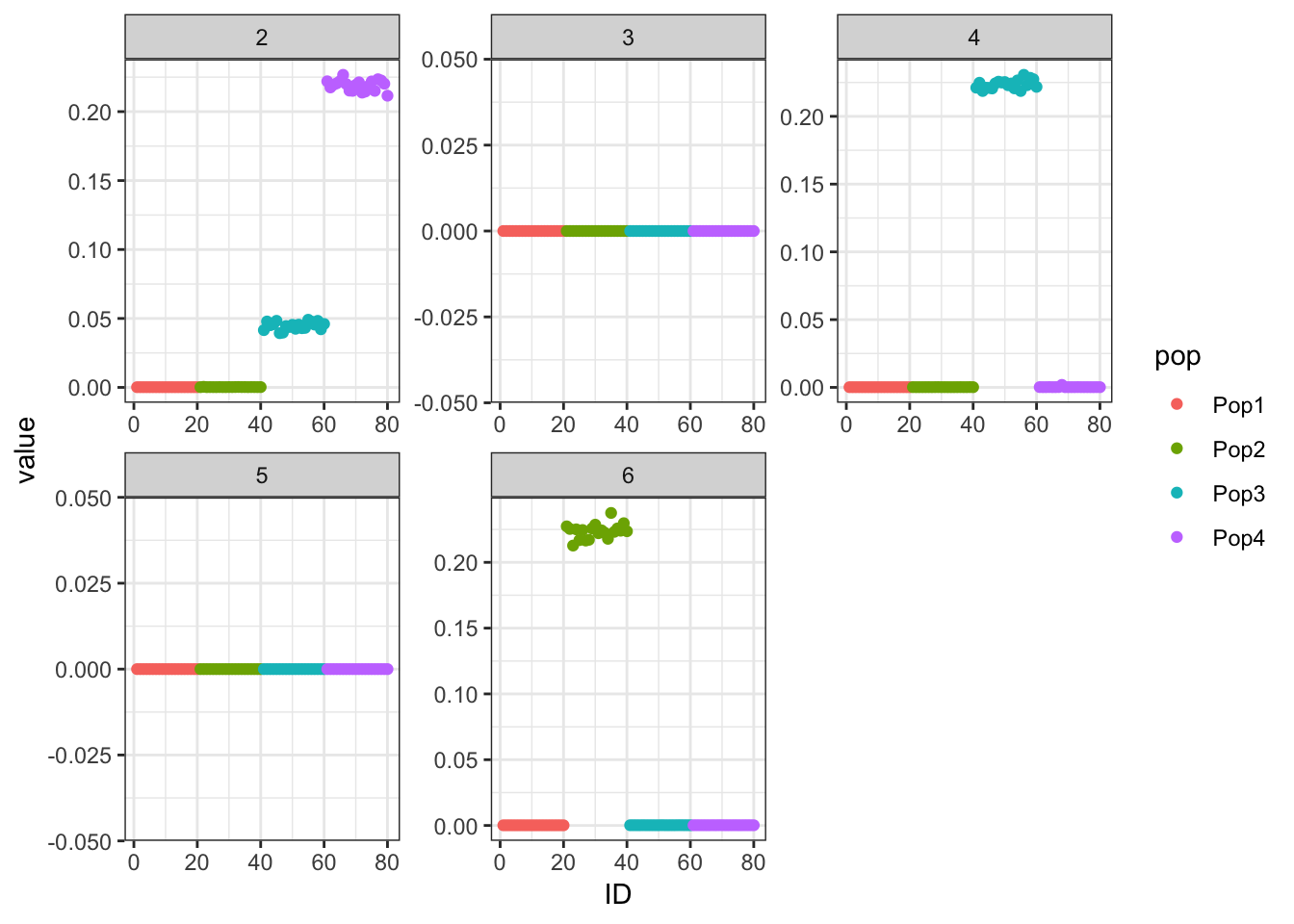

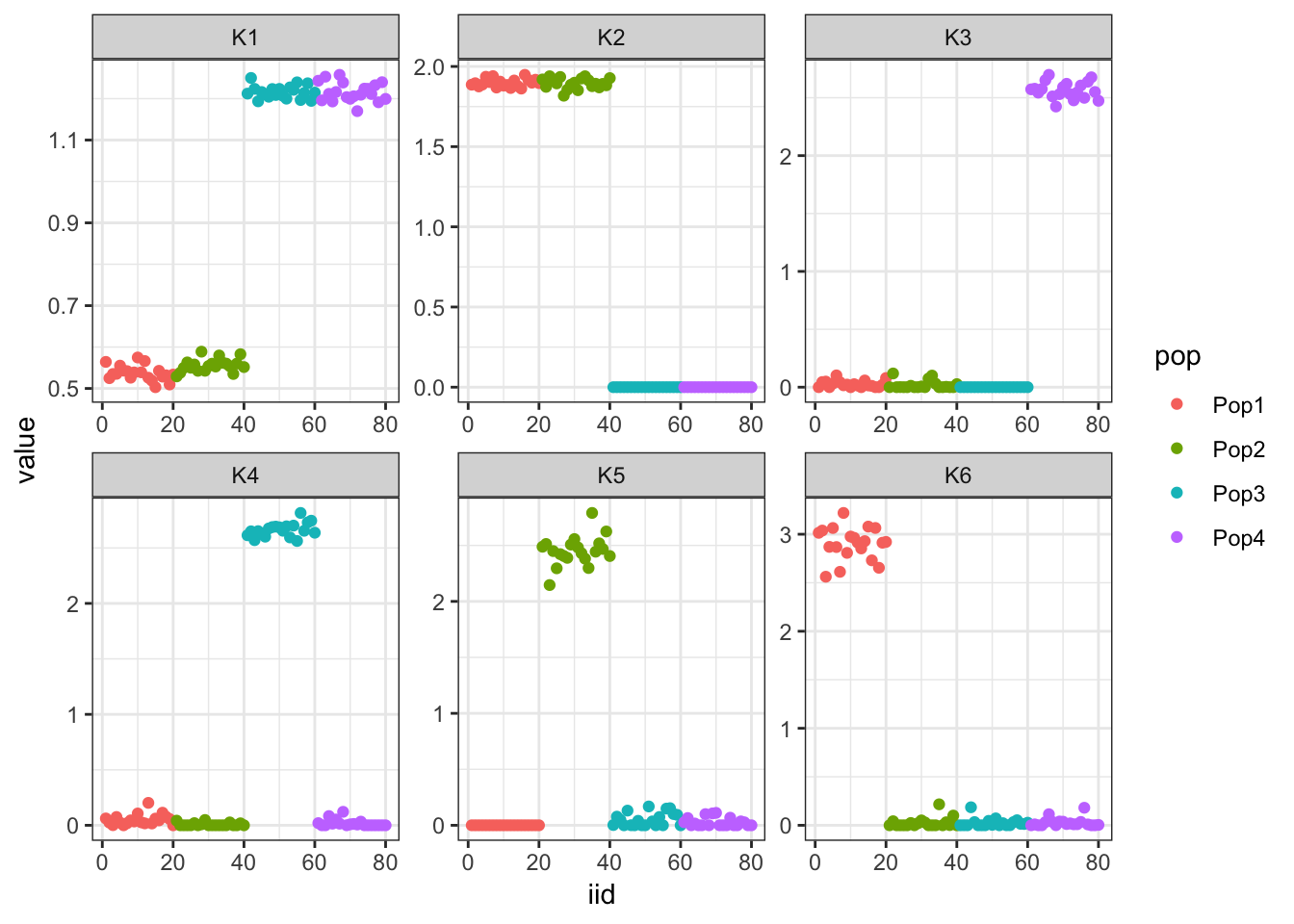

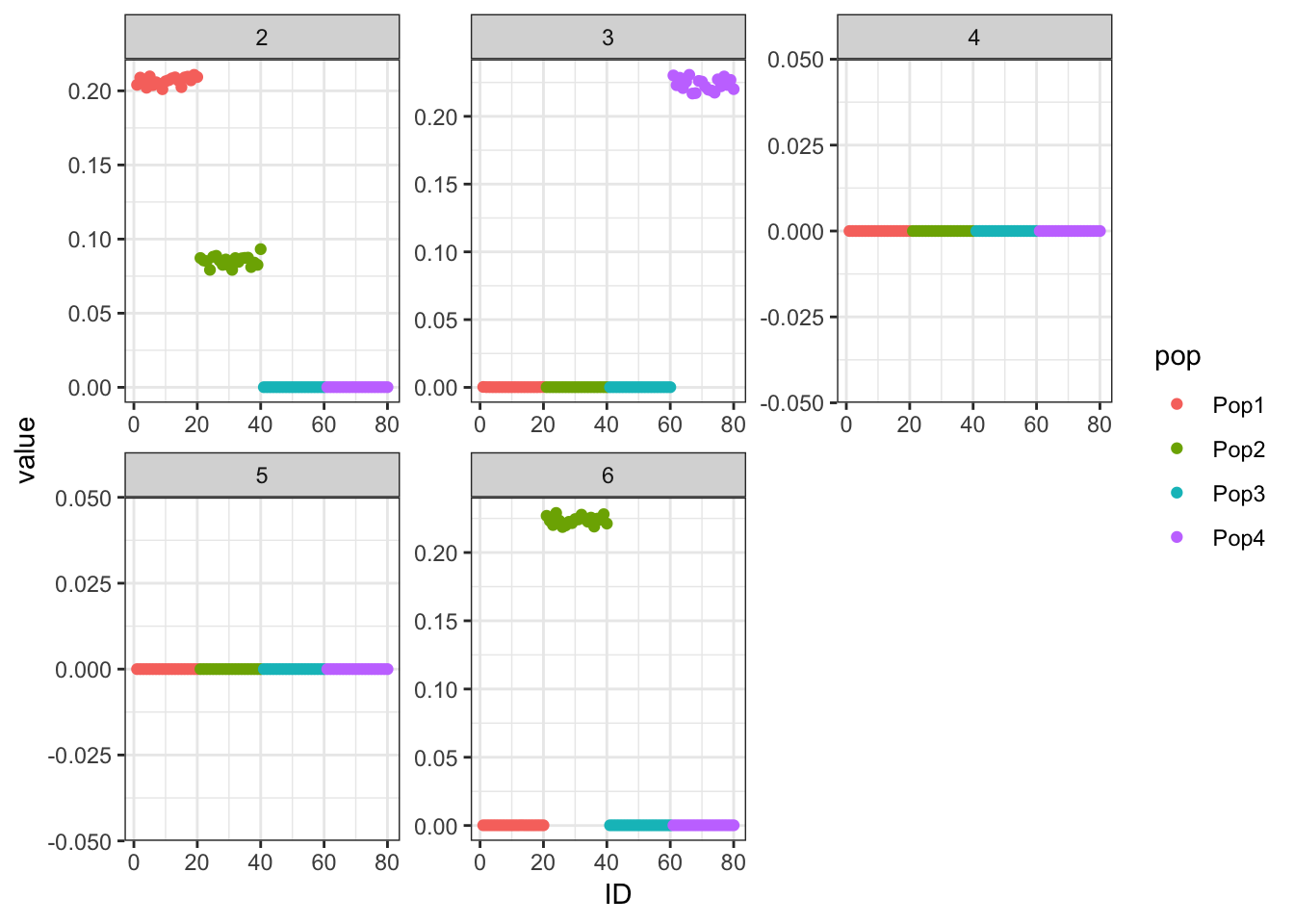

FLASH (Drift)

Here I apply Empirical Bayes matrix factorization with non-negative loadings and unconstrained factors. I fixed the factors to come from a normal prior with mean 0 and variance 1:

Fix Loadings Greedy

Here I fix the first loadings vector to the 1 vector and only run the greedy algorithm:

flash_fit = flashier::flashier(Y,

greedy.Kmax=K,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n))) Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Adding factor 3 to flash object...

Adding factor 4 to flash object...

Adding factor 5 to flash object...

Adding factor 6 to flash object...

Adding factor 7 to flash object...

Nullchecking 6 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -835444.8Very cool … K2,K3 represent the internal nodes of the tree and K4,K5,K6,K7 represent the leaves i.e. population specific factors.

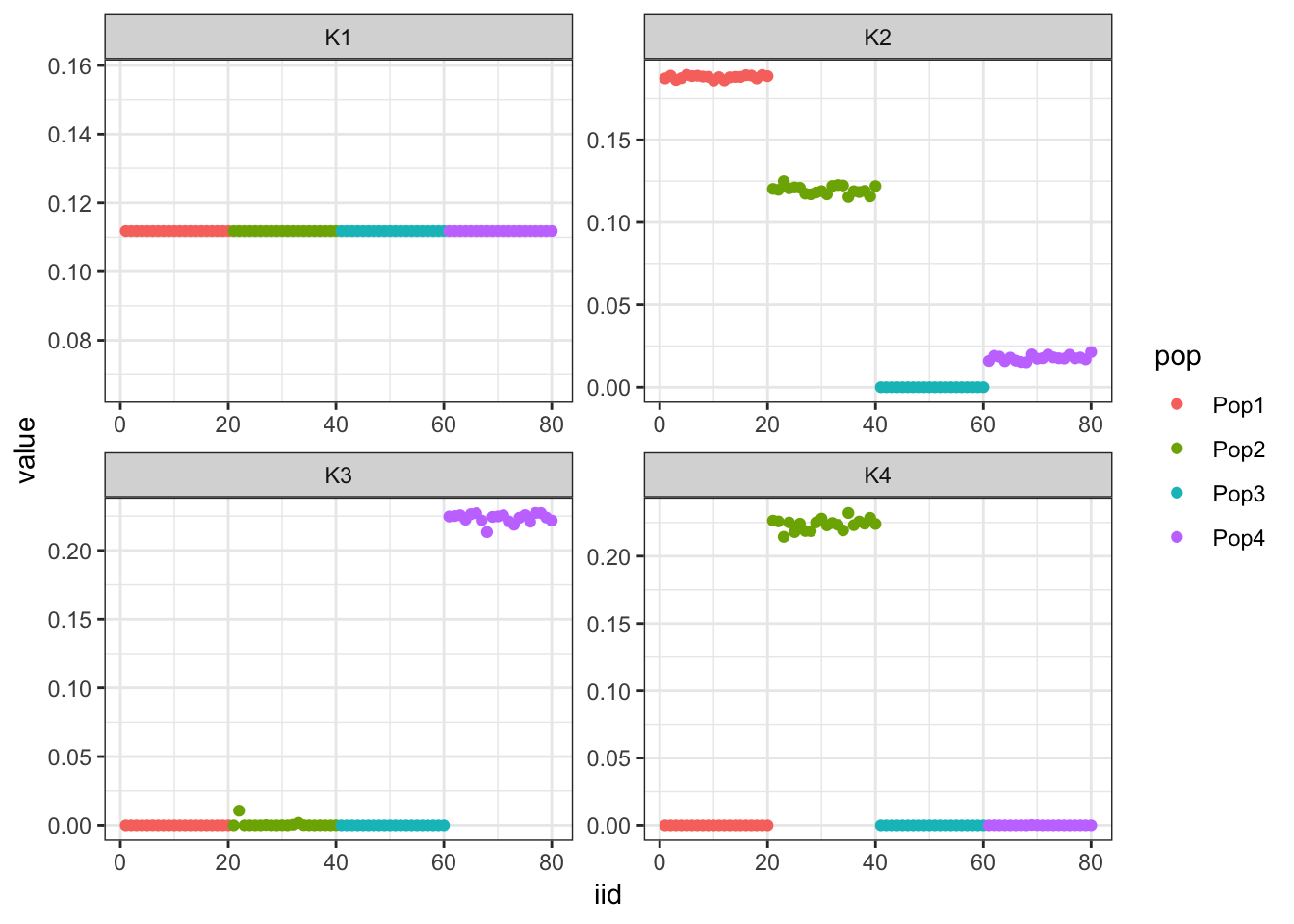

Fix Loadings Final Backfit

Here I fix the first loadings vector to the 1 vector, run the greedy algorithm to pick out \(K\) from the data and then run a final backfit to clean up the greedy solution:

flash_fit = flashier::flashier(Y,

flash.init = flash_fit,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n)),

backfit="final",

backfit.order="dropout",

backfit.reltol=10)Initializing flash object...

Adding factor 8 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 7 factors...

Nullchecking 6 factors...

Factor 2 removed, increasing objective by 1.488e+02.

Factor 4 removed, increasing objective by 9.215e-02.

Factor 6 removed, increasing objective by 9.954e-02.

Adding factor 8 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 7 factors...

Nullchecking 6 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -818372.4Hmm it seems like the final backfit removes the nice signal we had in the greedy run and it zeros out some of the factors.

Fix Loadings Alternating Backfit

Here I fix the first loadings vector to the 1 vector and run a scheme where backfitting is performed after greedily adding each factor:

flash_fit = flashier::flashier(Y,

greedy.Kmax=1,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n)),

backfit = "alternating",

backfit.order = "dropout",

backfit.reltol = 10)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Backfitting 2 factors...

Nullchecking 1 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -837946.4Again this leads to an odd solution with only two population specific factors and its not obvious what K2 represents.

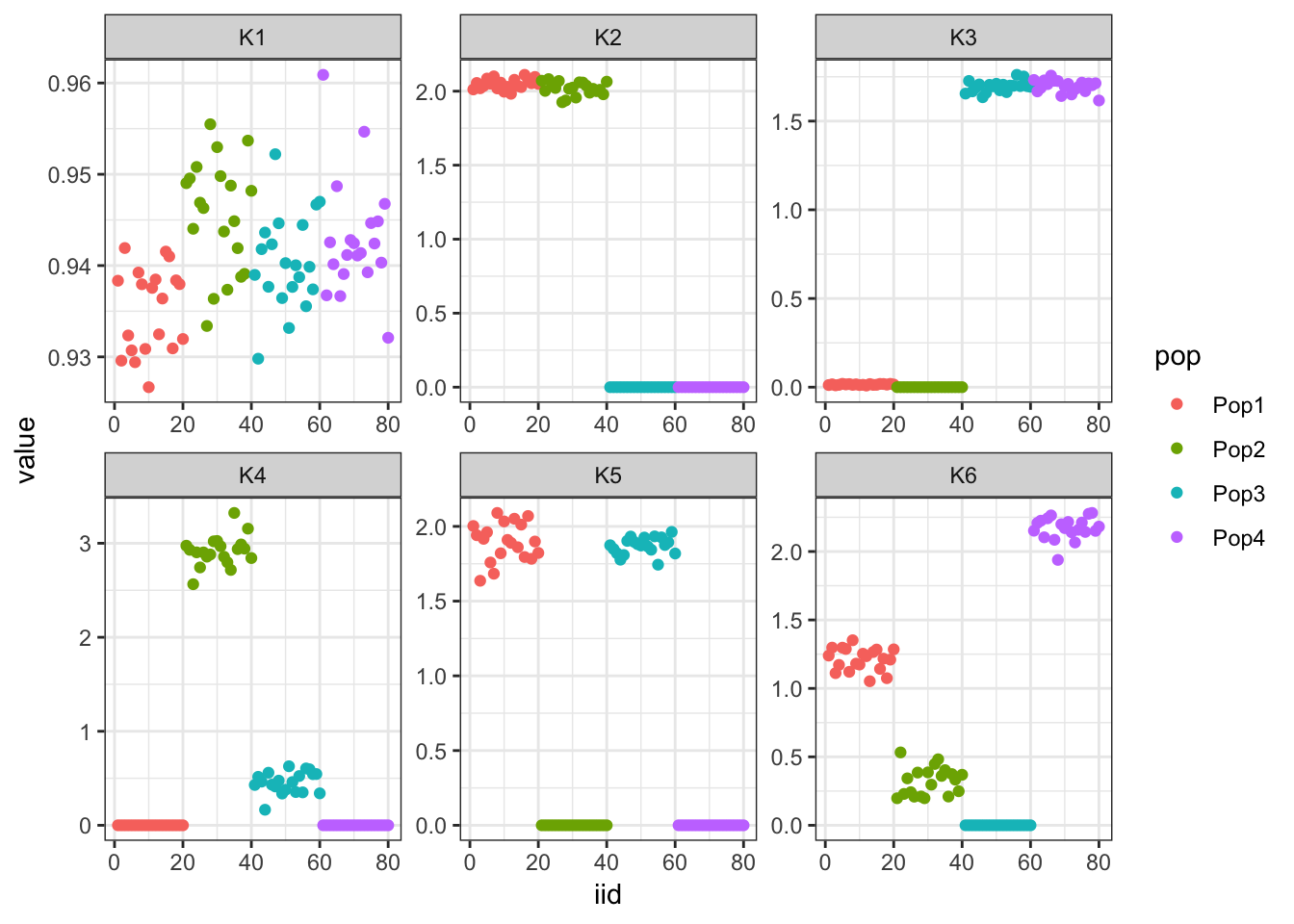

Fix Loadings Greedy FLASHR

Here I use a different implementation of FLASH in FLASHR for the greedy run

data = flash_set_data(Y)

f = flash_add_fixed_loadings(data, LL=cbind(rep(1, n)), backfit=TRUE, verbose=FALSE)

f = flash(data,

f_init=f,

Kmax=10,

var_type="constant",

ebnm_fn=list(l="ebnm_ash", f="ebnm_pn"),

ebnm_param=list(l=list(mixcompdist="+uniform", method="fdr"),

f=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0))),

backfit=FALSE)

l_df = data.frame(f$ldf$l)

colnames(l_df) = paste0("K", 1:ncol(l_df))

l_df$iid = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -iid, -pop)

p = ggplot(gath_l_df, aes(x=iid, y=value, color=pop)) +

geom_point() +

facet_wrap(K~., scale="free") +

theme_bw()

p

This looks very similar ot the greedy solution of flashier

Fix Loadings Final Backfit FLASHR

Here I use a different implementation of FLASH in FLASHR for a final backfit

f = flash_add_fixed_loadings(data, LL=cbind(rep(1, n)), backfit=TRUE, verbose=FALSE)

f = flash(data,

f_init=f,

Kmax=10,

var_type="constant",

ebnm_fn=list(l="ebnm_ash", f="ebnm_pn"),

ebnm_param=list(l=list(mixcompdist="+uniform", method="fdr"),

f=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0))),

backfit=TRUE)

l_df = data.frame(f$ldf$l)

colnames(l_df) = paste0("K", 1:ncol(l_df))

l_df$iid = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -iid, -pop)

p = ggplot(gath_l_df, aes(x=iid, y=value, color=pop)) +

geom_point() +

facet_wrap(K~., scale="free") +

theme_bw()

p

This looks roughly similar to the flashier solution expect the populations are switched.

Intialize with Tree

In progress

Mean Center Greedy

Here I don’t fix the first loadings vector and just mean center the data matrix before running greedy FLASH (Drift):

flash_fit = flashier::flashier(Y_c,

greedy.Kmax=K,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Adding factor 3 to flash object...

Adding factor 4 to flash object...

Adding factor 5 to flash object...

Adding factor 6 to flash object...

Nullchecking 6 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -803237.5Mean centering gives a similar solution to fixing the first factor.

Mean Center Final Backfit

Here I don’t fix the first loadings vector and just mean center the data matrix before running greedy FLASH (Drift) with a final backfit:

flash_fit = flashier::flashier(Y_c,

flash.init = flash_fit,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n)),

backfit="final",

backfit.order="dropout",

backfit.reltol=10)Initializing flash object...

Adding factor 7 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 6 factors...

Nullchecking 5 factors...

Factor 3 removed, increasing objective by 9.357e-02.

Factor 5 removed, increasing objective by 1.009e-01.

Adding factor 7 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 6 factors...

Nullchecking 5 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -795175.2We seem to be missing a few of the population specific factors?

Mean Center Alternating Backfit

Here I don’t fix the first loadings vector and just mean center the data matrix before running greedy FLASH (Drift) with alternating backfits:

flash_fit = flashier::flashier(Y,

greedy.Kmax=K,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n)),

backfit = "alternating",

backfit.order = "dropout",

backfit.reltol = 10)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Backfitting 2 factors...

Adding factor 3 to flash object...

Backfitting 3 factors...

Adding factor 4 to flash object...

Backfitting 4 factors...

Adding factor 5 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 3 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -818378.6Again we see only population specific factors but not shared factors.

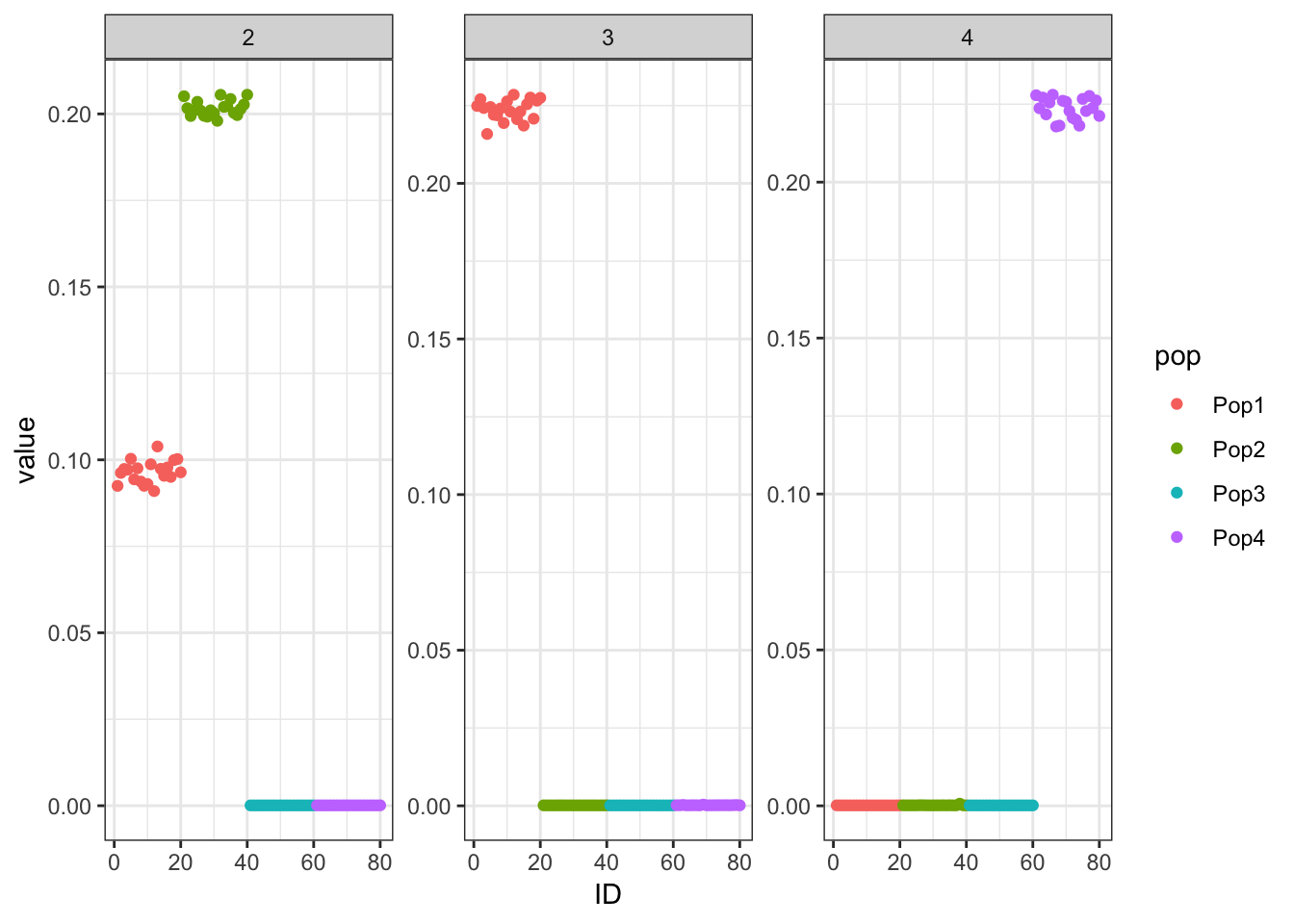

FLASH

Here I run FLASH with no sign constraints on the loadings or factors:

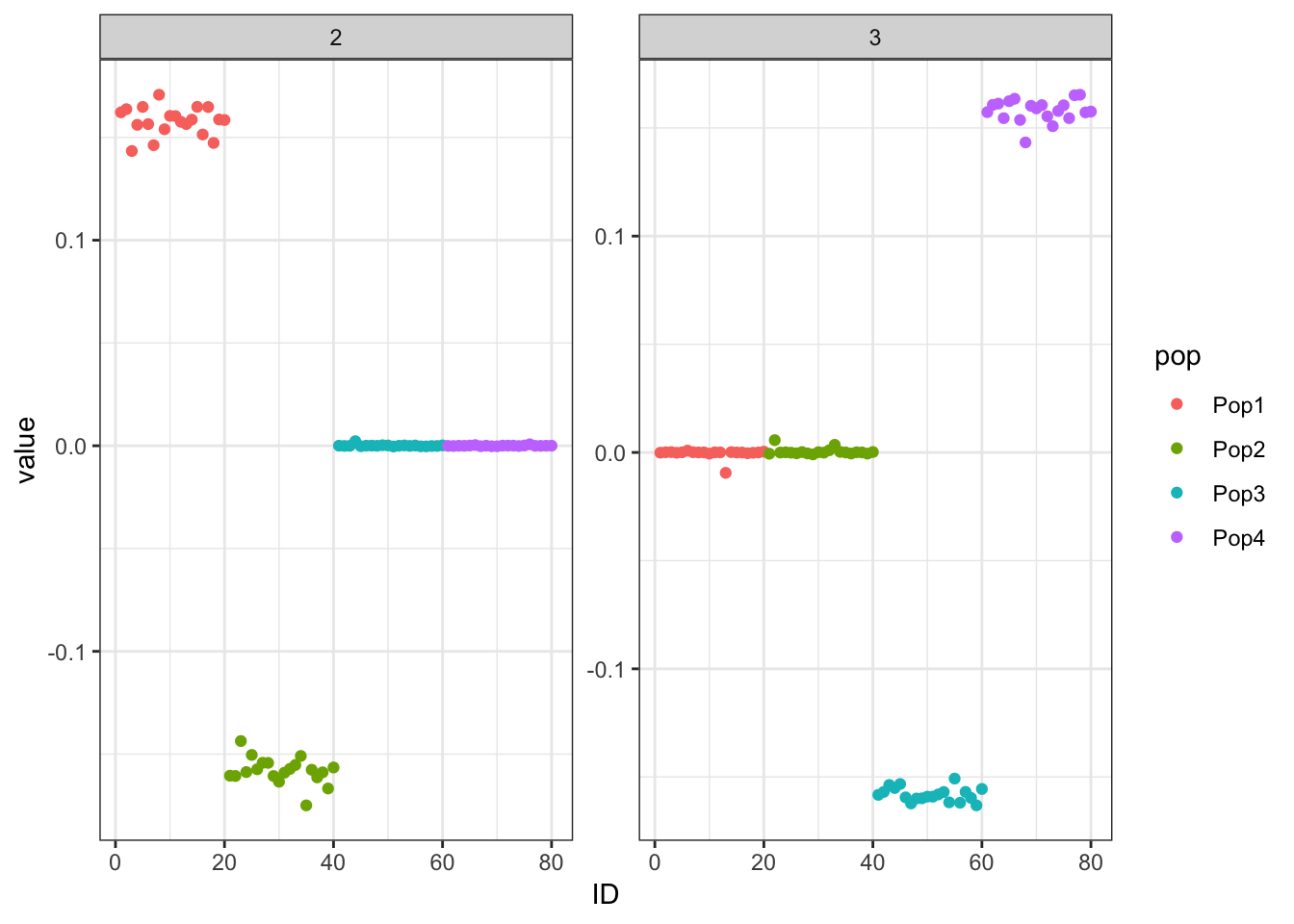

Mean Center Greedy

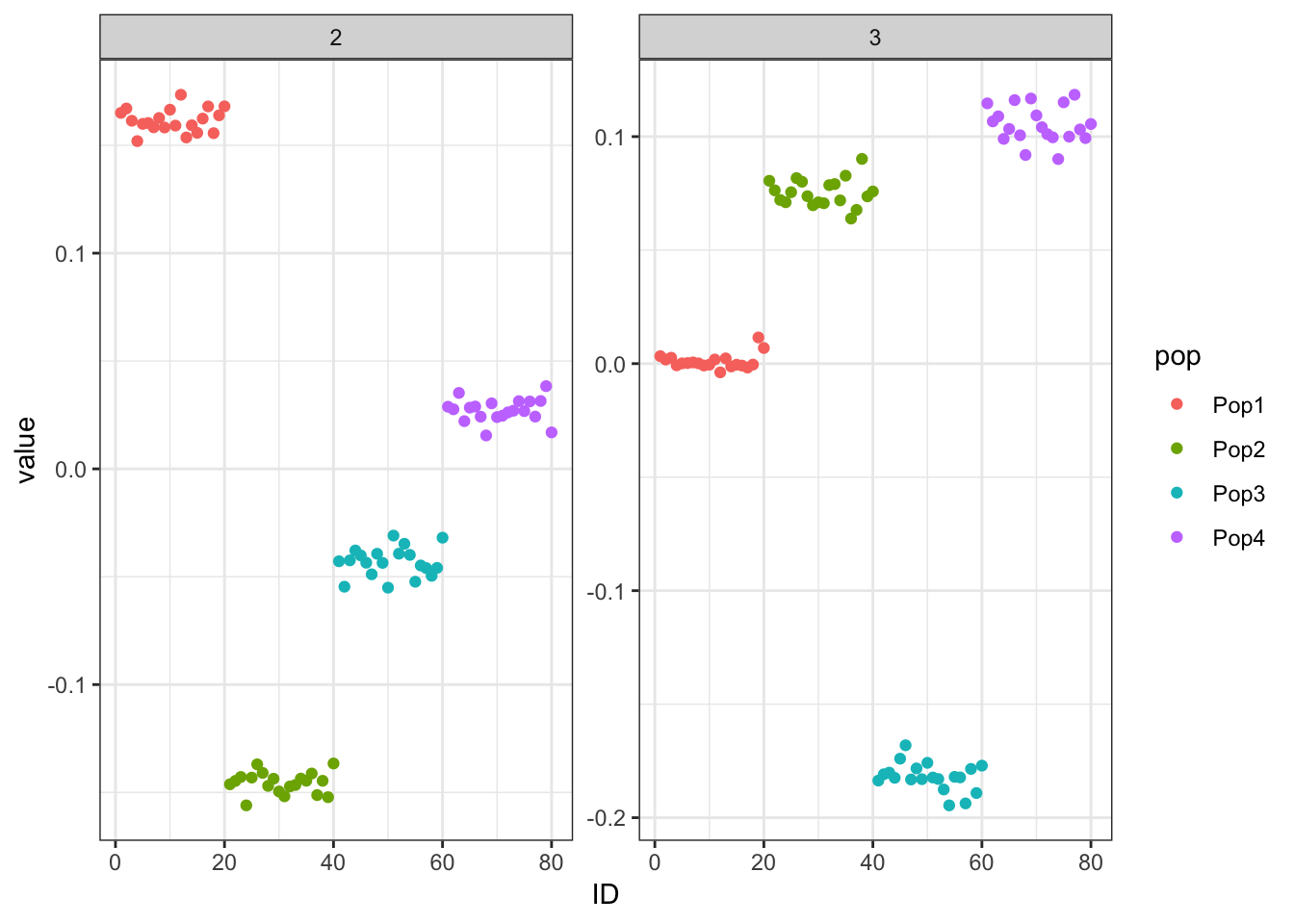

Here I mean center the data matrix before running greedy FLASH:

flash_fit = flashier::flashier(Y_c,

greedy.Kmax=K,

prior.type=c("normal.mixture", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Adding factor 3 to flash object...

Adding factor 4 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 3 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -777672.7Here we get a pretty nice solution similar to PCA but with a more interpretable sparsity pattern. K1 cleanly represents the first split in the tree and K2 and K3 cleanly represent the subsequent splits.

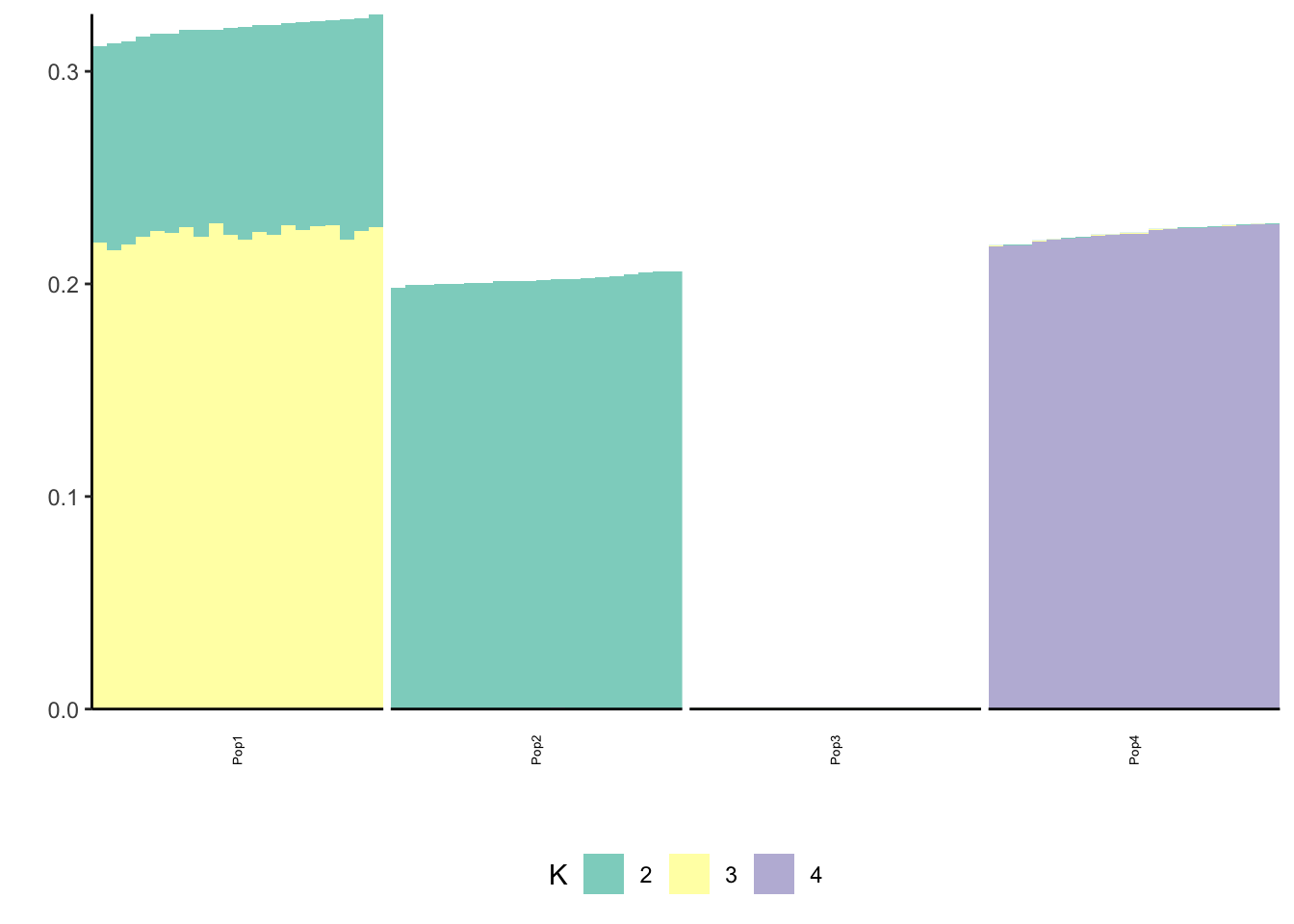

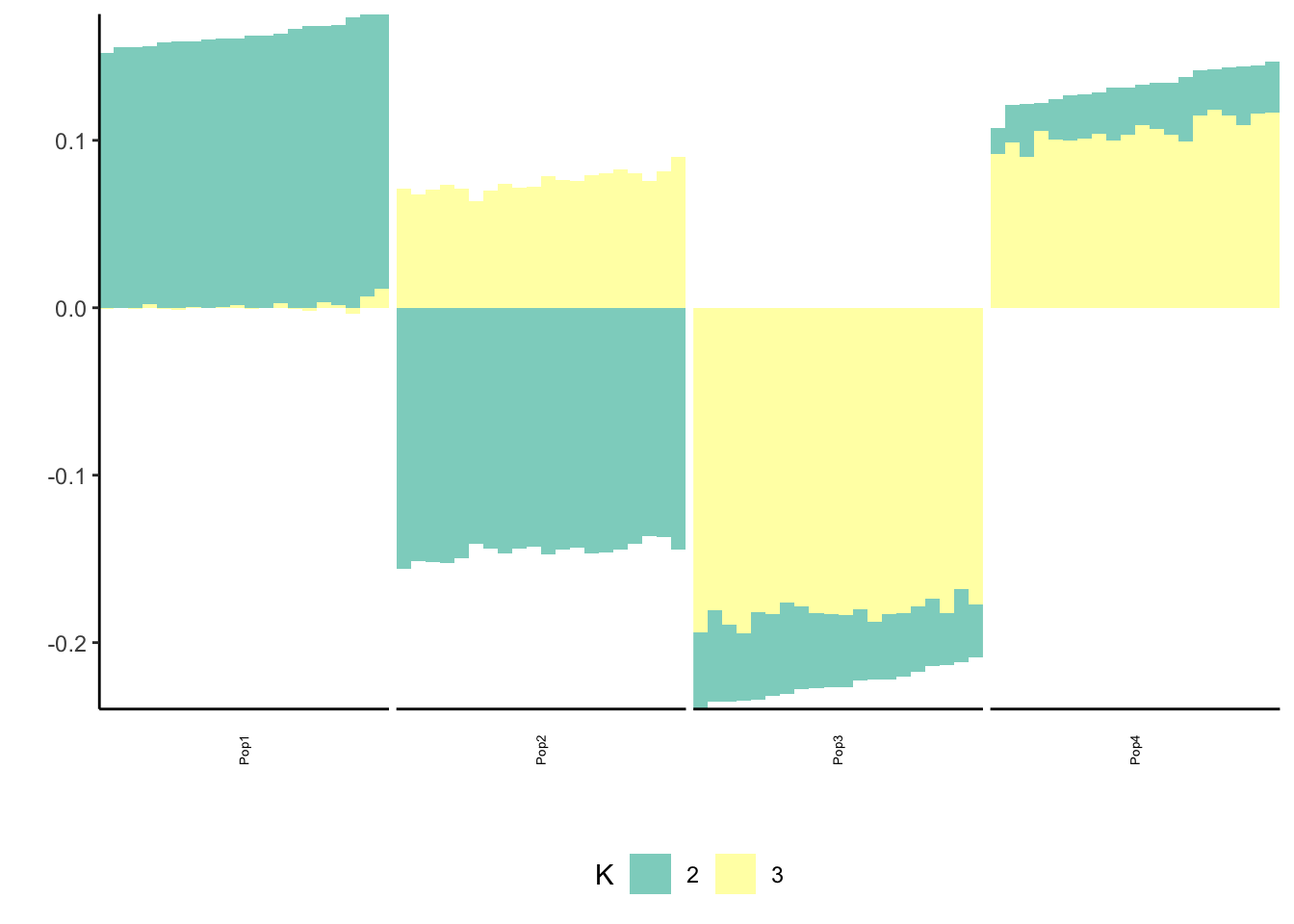

Mean Center Final Backfit

Here I mean center the data matrix before running greedy FLASH with a final backfit:

flash_fit = flashier::flashier(Y_c,

flash.init=flash_fit,

prior.type=c("normal.mixture", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

backfit = "final",

backfit.order = "dropout",

backfit.reltol = 10)Initializing flash object...

Adding factor 4 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 3 factors...

Nullchecking 3 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -777635.4This solution looks very similar to the greedy solution.

Mean Center Alternating Backfit

Here I mean center the data matrix before running greedy FLASH with alternating backfits:

flash_fit = flashier::flashier(Y_c,

greedy.Kmax=K,

prior.type=c("normal.mixture", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

backfit = "alternating",

backfit.order = "dropout",

backfit.reltol = 10)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Backfitting 2 factors...

Adding factor 3 to flash object...

Backfitting 3 factors...

Adding factor 4 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 3 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -777635.4This solution also looks very similar to the greedy / final back solutions.

Convex-NMF

I implemented convex non-negative matrix factorization from Ding et al 2012 see code/nmf.R. I wanted to see if the greedy approach using another nmf algorithm would lead to a similar result to semi-negative FLASH:

Greedy

Z = t(Y)

K = 6

Znew = Z

L = matrix(NA, nrow=n, ncol=K)

for(k in 1:K){

print(paste0("Running K=", k))

res = convex_nmf(Znew, 1, init="kmeans", n_iter=5000, eps=1e-4, n_print=200)

G = res$G

F = res$F

L[,k] = G[,1]

Znew = Znew - res$Xhat

}[1] "Running K=1"

[1] "iteration=200 | delta_rss=0.000307712412904948"

[1] "Running K=2"

[1] "Running K=3"

[1] "iteration=200 | delta_rss=0.00267128960695118"

[1] "iteration=400 | delta_rss=0.000111632572952658"

[1] "Running K=4"

[1] "iteration=200 | delta_rss=0.0176454830216244"

[1] "iteration=400 | delta_rss=0.00117226445581764"

[1] "iteration=600 | delta_rss=0.000648038927465677"

[1] "iteration=800 | delta_rss=0.000413901463616639"

[1] "iteration=1000 | delta_rss=0.000318053993396461"

[1] "iteration=1200 | delta_rss=0.000316710502374917"

[1] "iteration=1400 | delta_rss=0.000341458071488887"

[1] "iteration=1600 | delta_rss=0.000334837124682963"

[1] "iteration=1800 | delta_rss=0.000307496055029333"

[1] "iteration=2000 | delta_rss=0.000276727427262813"

[1] "iteration=2200 | delta_rss=0.000246031733695418"

[1] "iteration=2400 | delta_rss=0.000216541695408523"

[1] "iteration=2600 | delta_rss=0.000189068436156958"

[1] "iteration=2800 | delta_rss=0.000164072669576854"

[1] "iteration=3000 | delta_rss=0.00014174246462062"

[1] "iteration=3200 | delta_rss=0.000122082477901131"

[1] "iteration=3400 | delta_rss=0.000104979786556214"

[1] "Running K=5"

[1] "iteration=200 | delta_rss=0.00040846667252481"

[1] "Running K=6"l_df = data.frame(L)

colnames(l_df) = paste0("K", 1:K)

l_df$iid = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -iid, -pop)

p = ggplot(gath_l_df, aes(x=iid, y=value, color=pop)) +

geom_point() +

facet_wrap(K~., scale="free") +

theme_bw()

print(p)

The first factor looks like the mean then the following two look similar to greedy FLASH. The following 3 are less interpretable.

Greedy (remove ancestral mean)

Z = t(Y) - (2*sim$p_a)

K = 6

Znew = Z

L = matrix(NA, nrow=n, ncol=K)

for(k in 1:K){

print(paste0("Running K=", k))

res = convex_nmf(Znew, 1, init="kmeans", n_iter=5000, eps=1e-4, n_print=200)

G = res$G

F = res$F

L[,k] = G[,1]

Znew = Znew - res$Xhat

}[1] "Running K=1"

[1] "iteration=200 | delta_rss=0.038165882404428"

[1] "Running K=2"

[1] "Running K=3"

[1] "iteration=200 | delta_rss=0.0193374897935428"

[1] "iteration=400 | delta_rss=0.00135371158830822"

[1] "iteration=600 | delta_rss=0.000302112894132733"

[1] "Running K=4"

[1] "iteration=200 | delta_rss=0.00983678991906345"

[1] "iteration=400 | delta_rss=0.00161215773550794"

[1] "iteration=600 | delta_rss=0.00111764576286077"

[1] "iteration=800 | delta_rss=0.000982423196546733"

[1] "iteration=1000 | delta_rss=0.000902152387425303"

[1] "iteration=1200 | delta_rss=0.000837642524857074"

[1] "iteration=1400 | delta_rss=0.000780722009949386"

[1] "iteration=1600 | delta_rss=0.000729036459233612"

[1] "iteration=1800 | delta_rss=0.000681623059790581"

[1] "iteration=2000 | delta_rss=0.000637932971585542"

[1] "iteration=2200 | delta_rss=0.000597573525737971"

[1] "iteration=2400 | delta_rss=0.000560227606911212"

[1] "iteration=2600 | delta_rss=0.000525624142028391"

[1] "iteration=2800 | delta_rss=0.000493524828925729"

[1] "iteration=3000 | delta_rss=0.000463717442471534"

[1] "iteration=3200 | delta_rss=0.000436011527199298"

[1] "iteration=3400 | delta_rss=0.000410235545132309"

[1] "iteration=3600 | delta_rss=0.000386234081815928"

[1] "iteration=3800 | delta_rss=0.000363866449333727"

[1] "iteration=4000 | delta_rss=0.000343004532624036"

[1] "iteration=4200 | delta_rss=0.000323532032780349"

[1] "iteration=4400 | delta_rss=0.000305342546198517"

[1] "iteration=4600 | delta_rss=0.000288339098915458"

[1] "iteration=4800 | delta_rss=0.000272432982455939"

[1] "iteration=5000 | delta_rss=0.000257542997132987"

[1] "Running K=5"

[1] "iteration=200 | delta_rss=0.00570639106445014"

[1] "iteration=400 | delta_rss=0.00183307699626312"

[1] "iteration=600 | delta_rss=0.00145148171577603"

[1] "iteration=800 | delta_rss=0.00125598645536229"

[1] "iteration=1000 | delta_rss=0.00110427790787071"

[1] "iteration=1200 | delta_rss=0.000977315939962864"

[1] "iteration=1400 | delta_rss=0.00086888181976974"

[1] "iteration=1600 | delta_rss=0.000775410851929337"

[1] "iteration=1800 | delta_rss=0.000694358721375465"

[1] "iteration=2000 | delta_rss=0.000623753992840648"

[1] "iteration=2200 | delta_rss=0.000562010216526687"

[1] "iteration=2400 | delta_rss=0.000507824996020645"

[1] "iteration=2600 | delta_rss=0.00046011689119041"

[1] "iteration=2800 | delta_rss=0.000417980481870472"

[1] "iteration=3000 | delta_rss=0.000380654237233102"

[1] "iteration=3200 | delta_rss=0.000347494380548596"

[1] "iteration=3400 | delta_rss=0.000317954632919282"

[1] "iteration=3600 | delta_rss=0.000291570089757442"

[1] "iteration=3800 | delta_rss=0.000267943425569683"

[1] "iteration=4000 | delta_rss=0.000246734241954982"

[1] "iteration=4200 | delta_rss=0.000227649812586606"

[1] "iteration=4400 | delta_rss=0.000210437865462154"

[1] "iteration=4600 | delta_rss=0.000194880121853203"

[1] "iteration=4800 | delta_rss=0.000180787581484765"

[1] "iteration=5000 | delta_rss=0.000167995691299438"

[1] "Running K=6"

[1] "iteration=200 | delta_rss=0.00401343387784436"

[1] "iteration=400 | delta_rss=0.000489717465825379"

[1] "iteration=600 | delta_rss=0.000220007786992937"

[1] "iteration=800 | delta_rss=0.000161810661666095"

[1] "iteration=1000 | delta_rss=0.000139431154821068"

[1] "iteration=1200 | delta_rss=0.00012649194104597"

[1] "iteration=1400 | delta_rss=0.000116988958325237"

[1] "iteration=1600 | delta_rss=0.000109126151073724"

[1] "iteration=1800 | delta_rss=0.000102238438557833"l_df = data.frame(L)

colnames(l_df) = paste0("K", 1:K)

l_df$iid = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -iid, -pop)

p = ggplot(gath_l_df, aes(x=iid, y=value, color=pop)) +

geom_point() +

facet_wrap(K~., scale="free") +

theme_bw()

print(p)

This has a similar solution to greedy semi-negative FLASH except the first factor is way less sparse and the other factors are less sparse in general. This makes it clear how important it is to estimate the mean parameter in the model correctly.

Admixture Graph Simulation (in progress)

Here I simulate under an admixture event on the tree from population 2 to population 3 by setting the admixture weight \(w=0.4\). I simulate 10 individuals per population and 10,000 SNPs:

set.seed(1990)

# number of individuals per pop

n_per_pop = 20

# set w = 0.0 to just simulate from a tree

sim = simple_graph_simulation(w=0.4, c1=.5, c2=.5, p=10000, n_per_pop=n_per_pop)

# data matrix

Y = sim$Y

# centered data matrix

Y_c = scale(Y, center=TRUE, scale=FALSE)

# centered scaled data matrix

Y_cs = scale(Y, center=TRUE, scale=TRUE)

# number of individuals

n = nrow(Y)

# number of SNPs

p = ncol(Y)

# number of factors

K = 20PCA

Here I apply PCA to the centered and scaled genotype matrix:

svd_fit = lfa:::trunc.svd(Y_cs, K)

lamb = svd_fit$d^2

p = qplot(1:K, lamb / sum(lamb)) +

xlab("PC") +

ylab("PVE") +

theme_bw()

print(p)

| Version | Author | Date |

|---|---|---|

| 1a21d43 | jhmarcus | 2019-04-17 |

From the PVE plot we can see there is a large drop off after the first 3 PCs so lets just visualize them:

l_df = data.frame(svd_fit$u[,1:3])

colnames(l_df) = paste0("PC", 1:3)

l_df$iid = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(PC, value, -iid, -pop)

p = ggplot(gath_l_df, aes(x=iid, y=value, color=pop)) +

geom_point() +

facet_wrap(PC~., scale="free") +

theme_bw()

print(p)

| Version | Author | Date |

|---|---|---|

| 1a21d43 | jhmarcus | 2019-04-17 |

There is some signal of the admixture event in PC1 … we see a shift of population 2 to 0.0 in PC1 rather than being cluster with population 4.

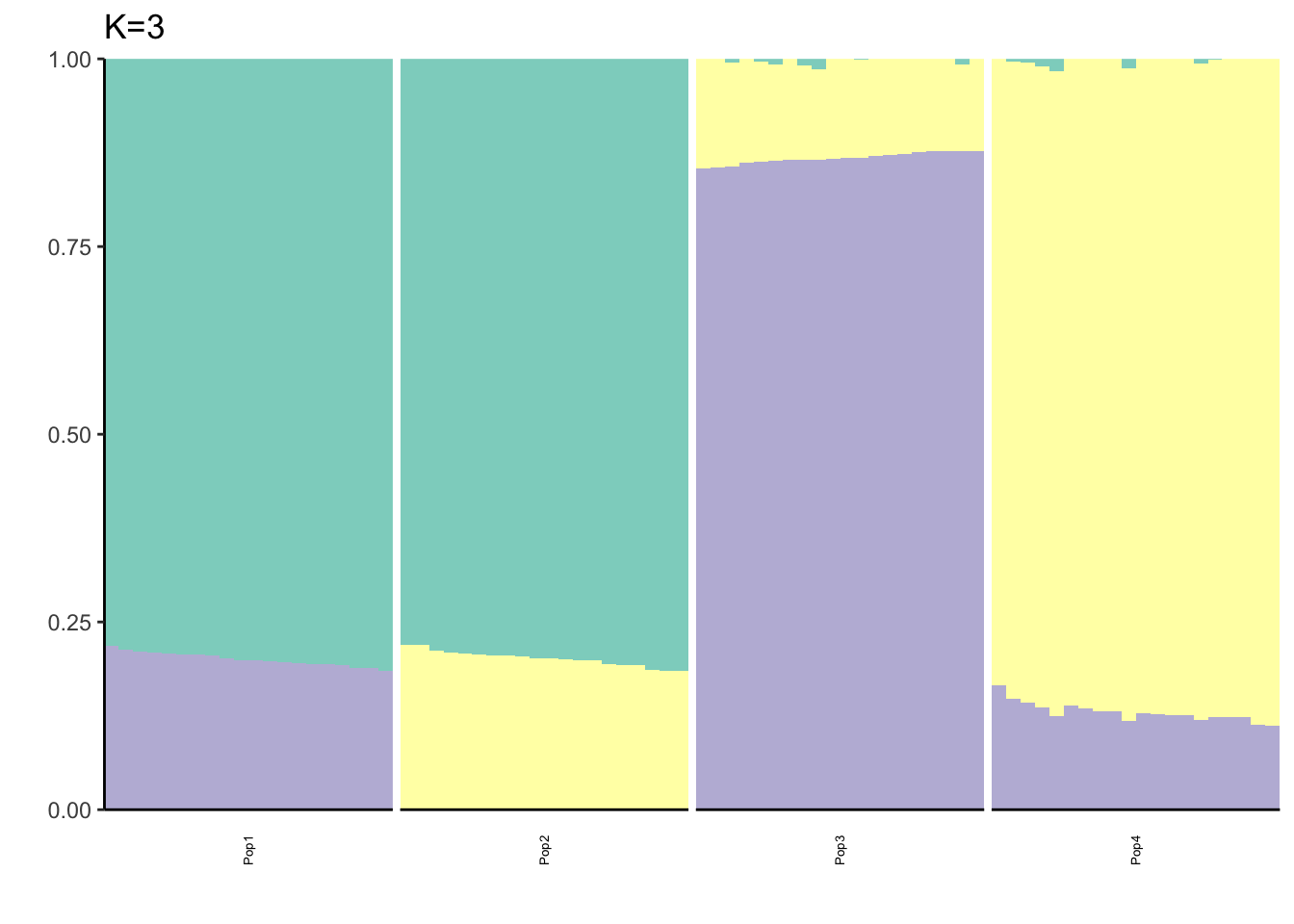

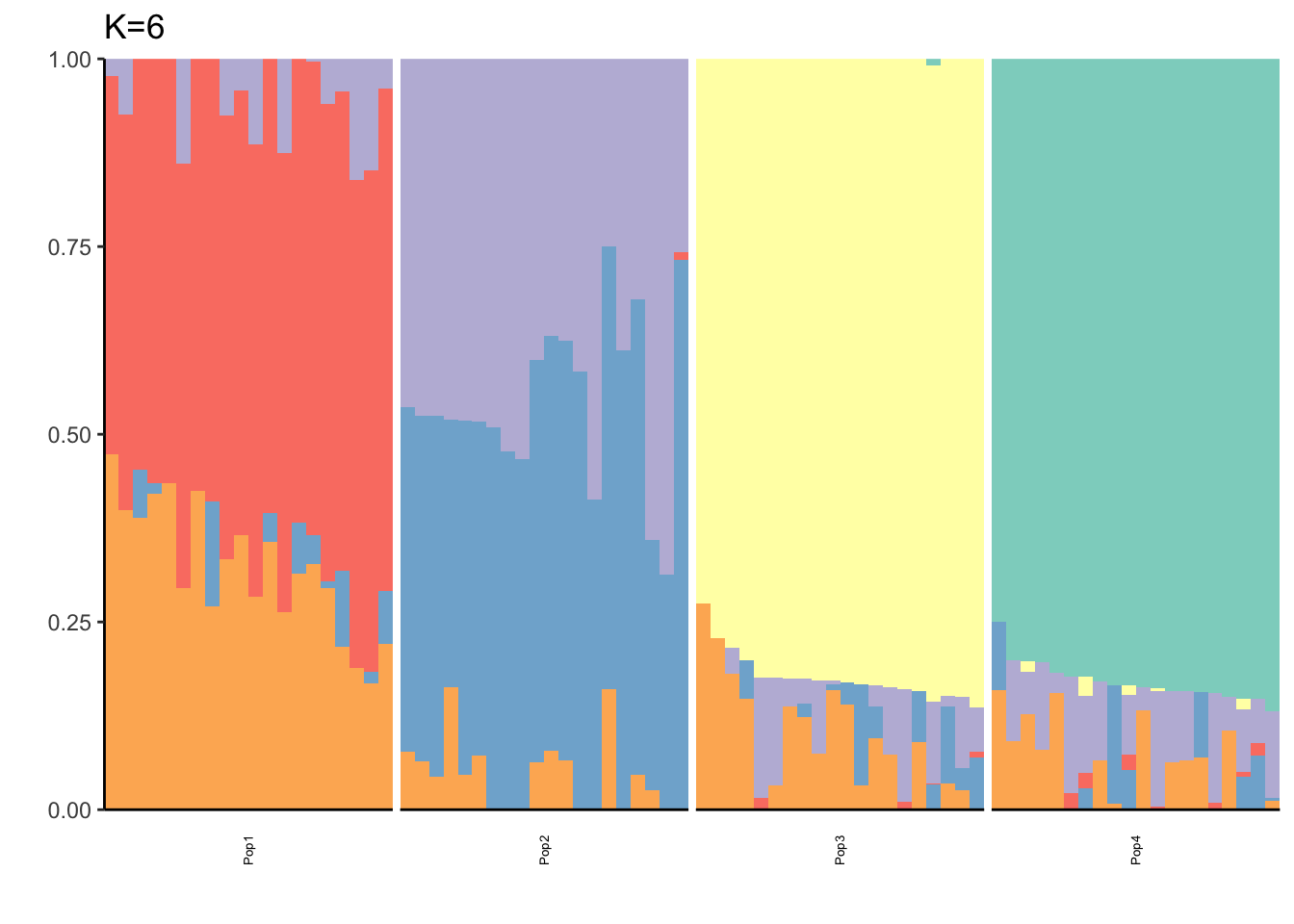

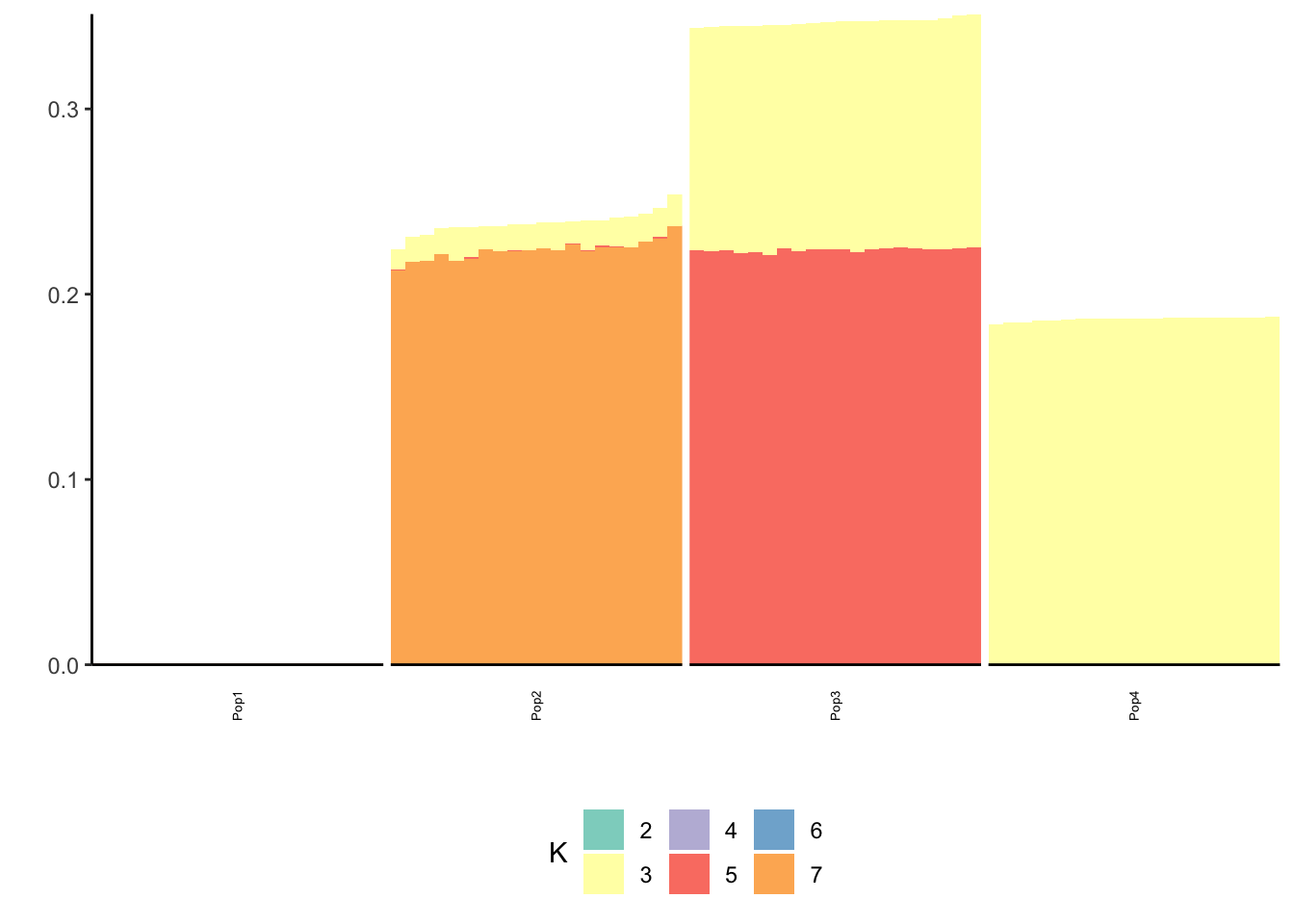

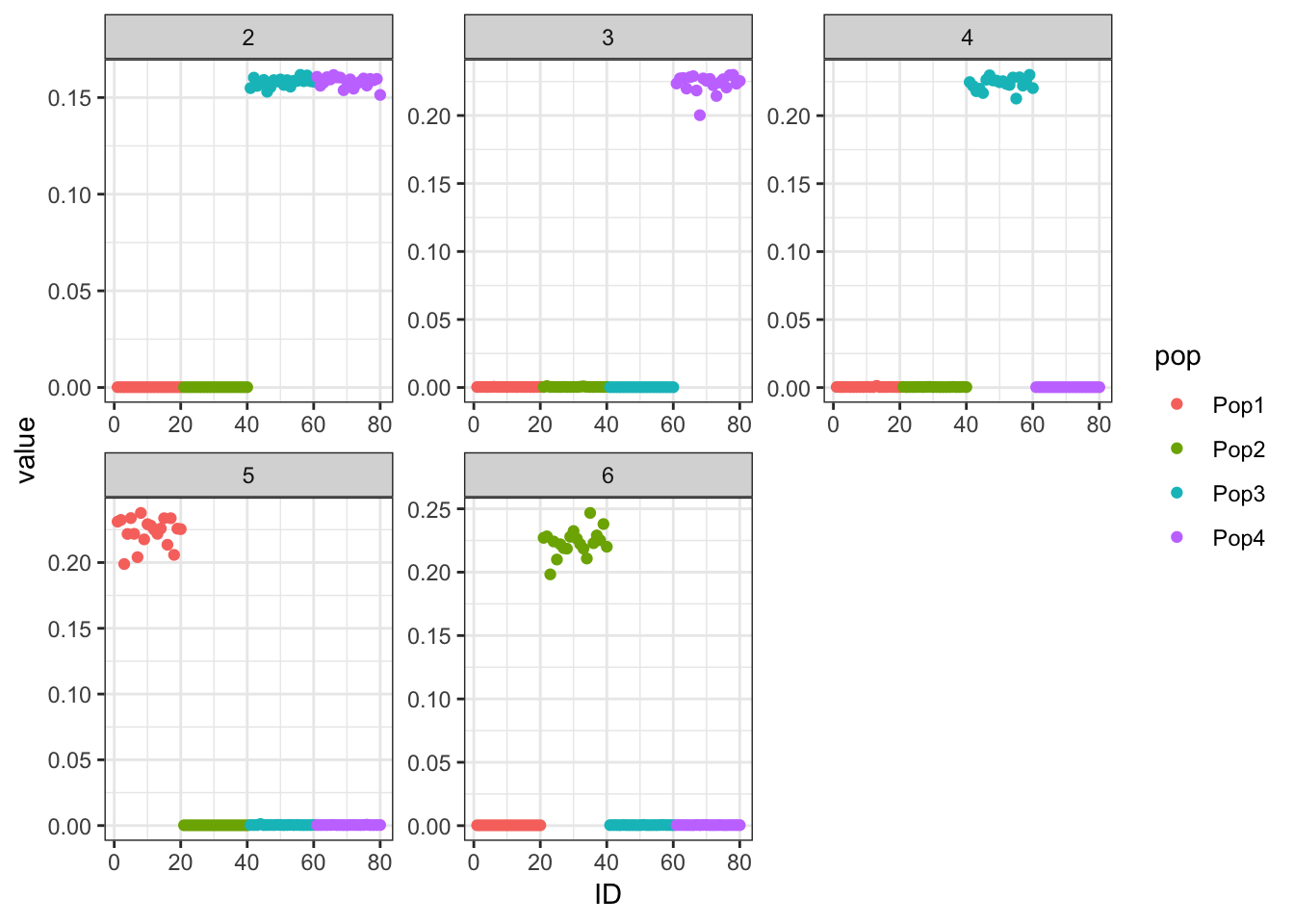

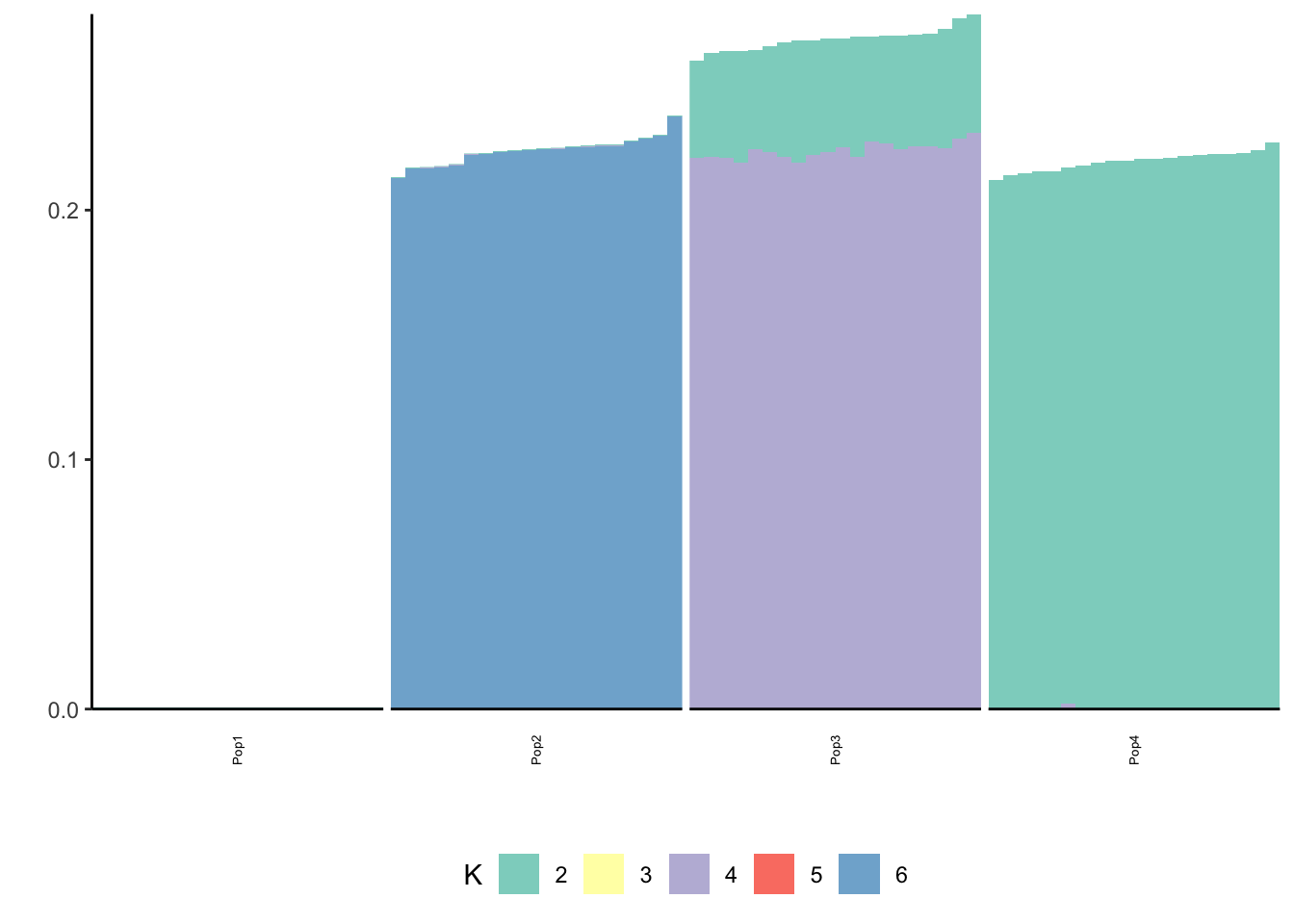

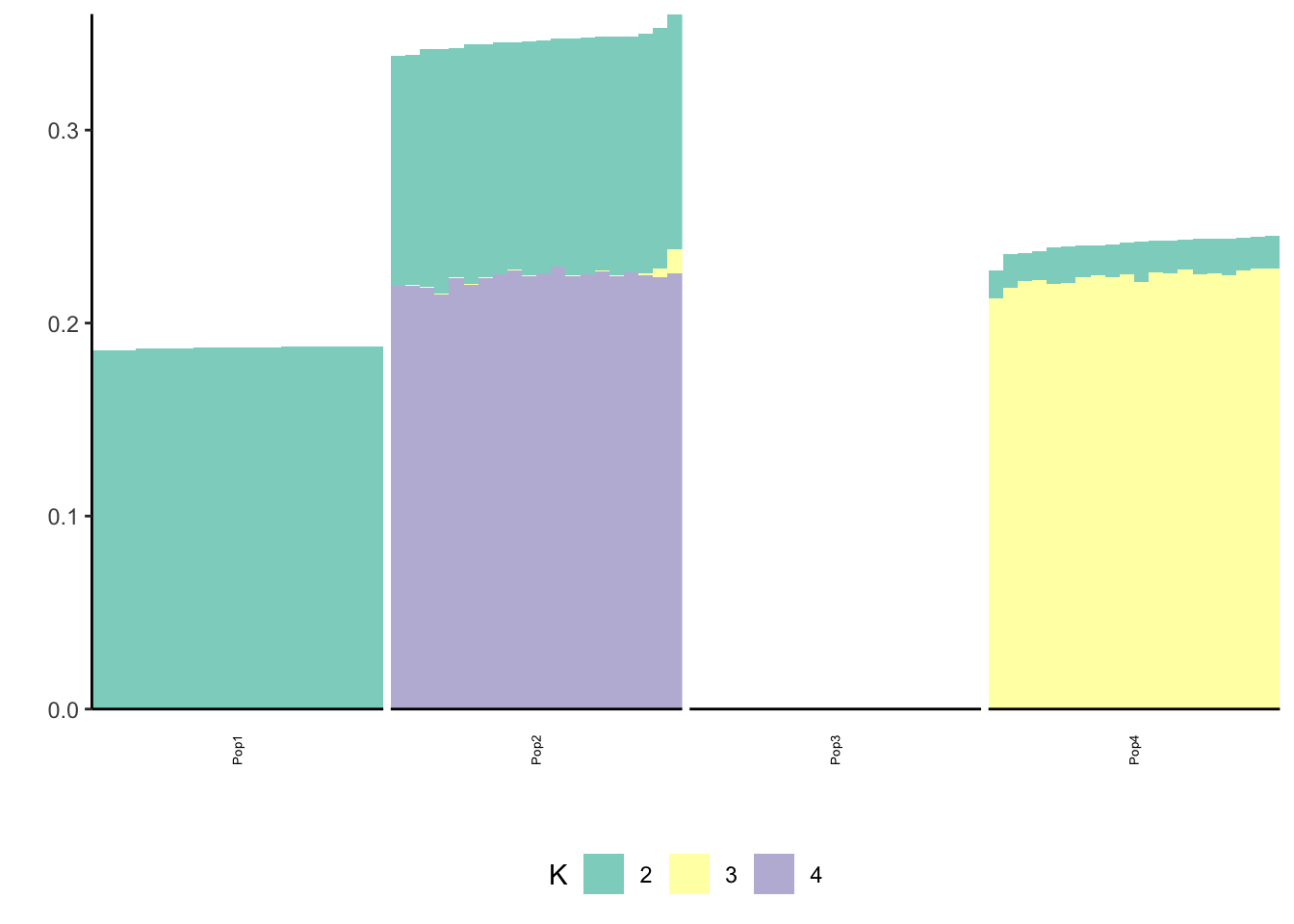

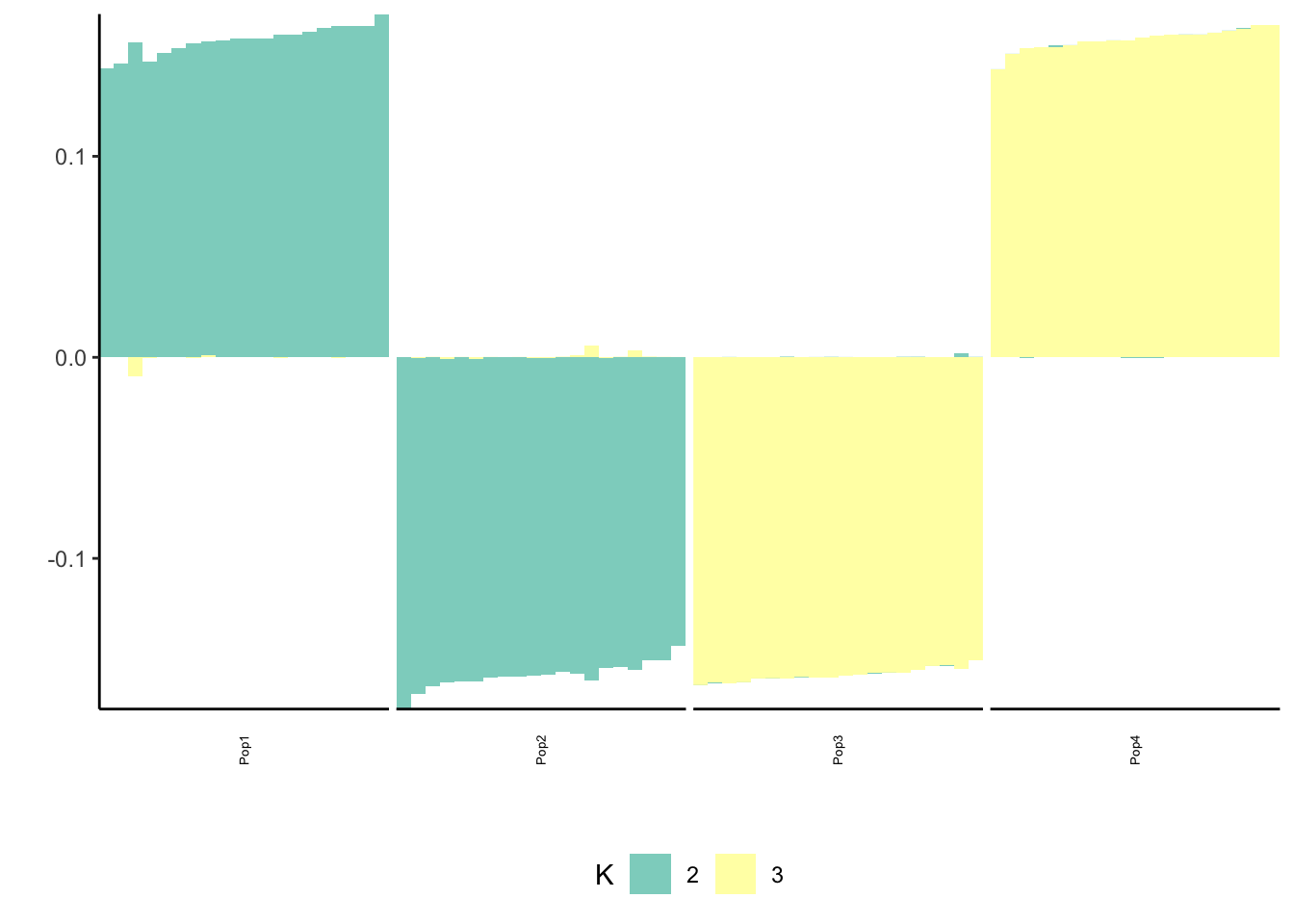

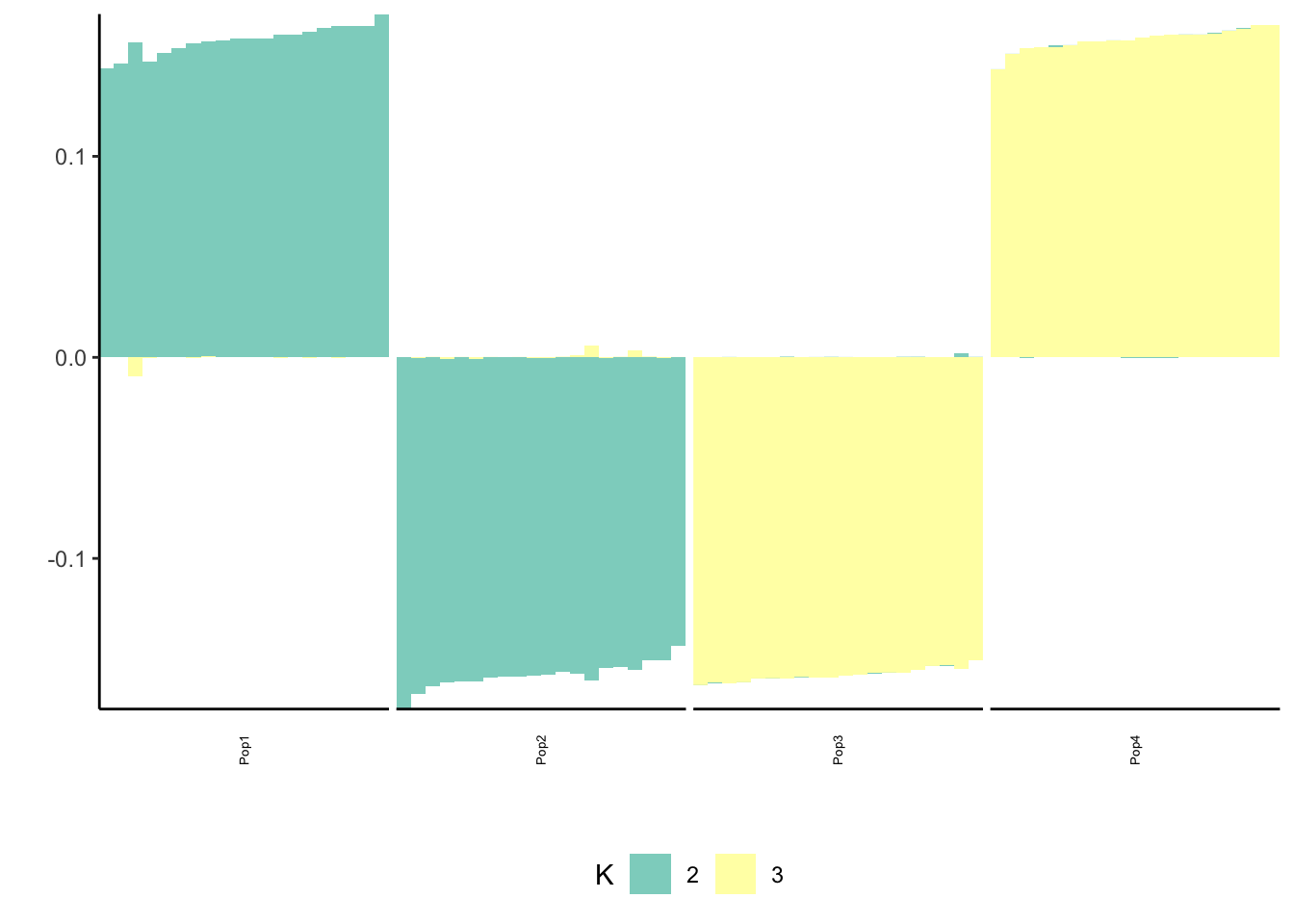

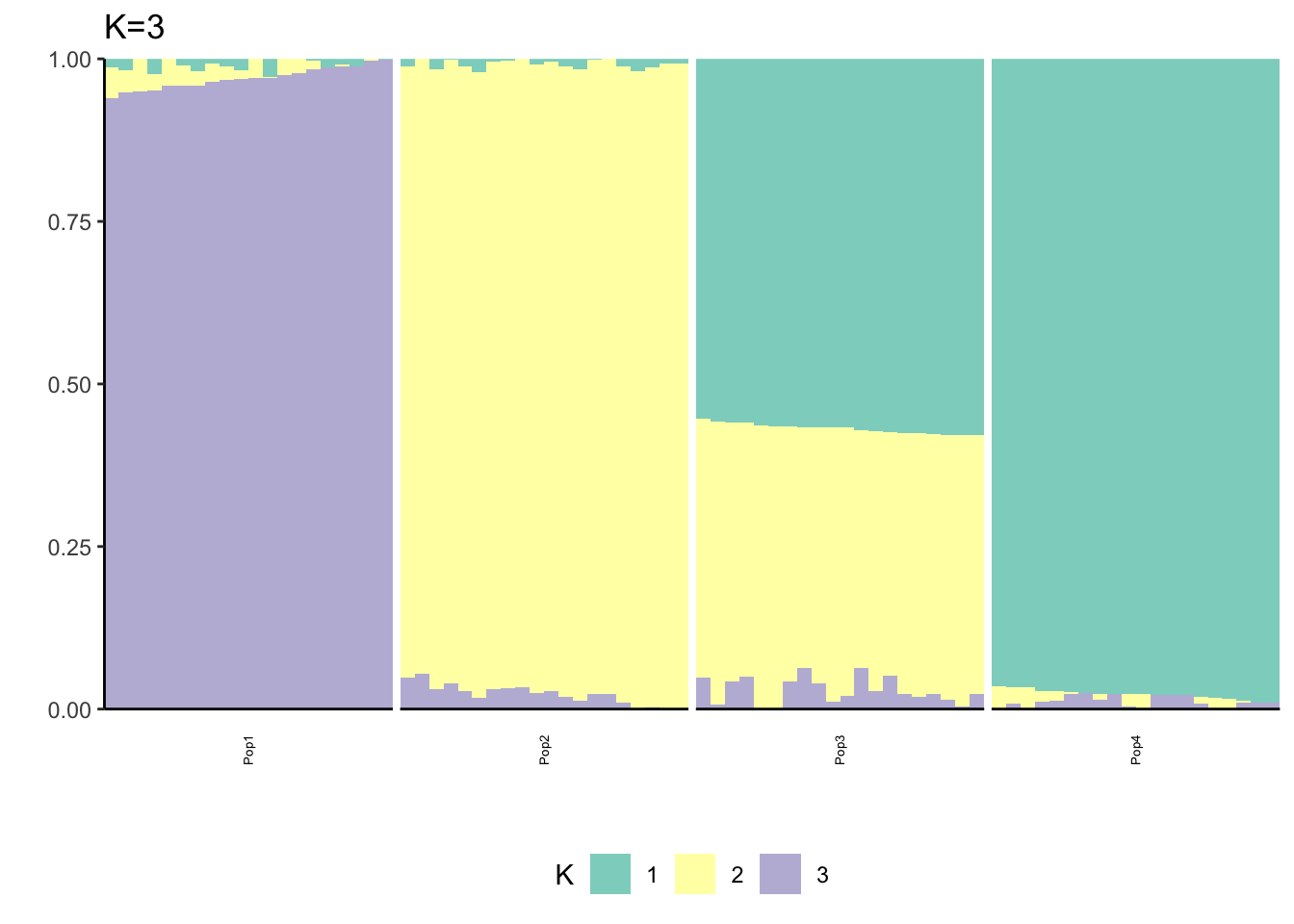

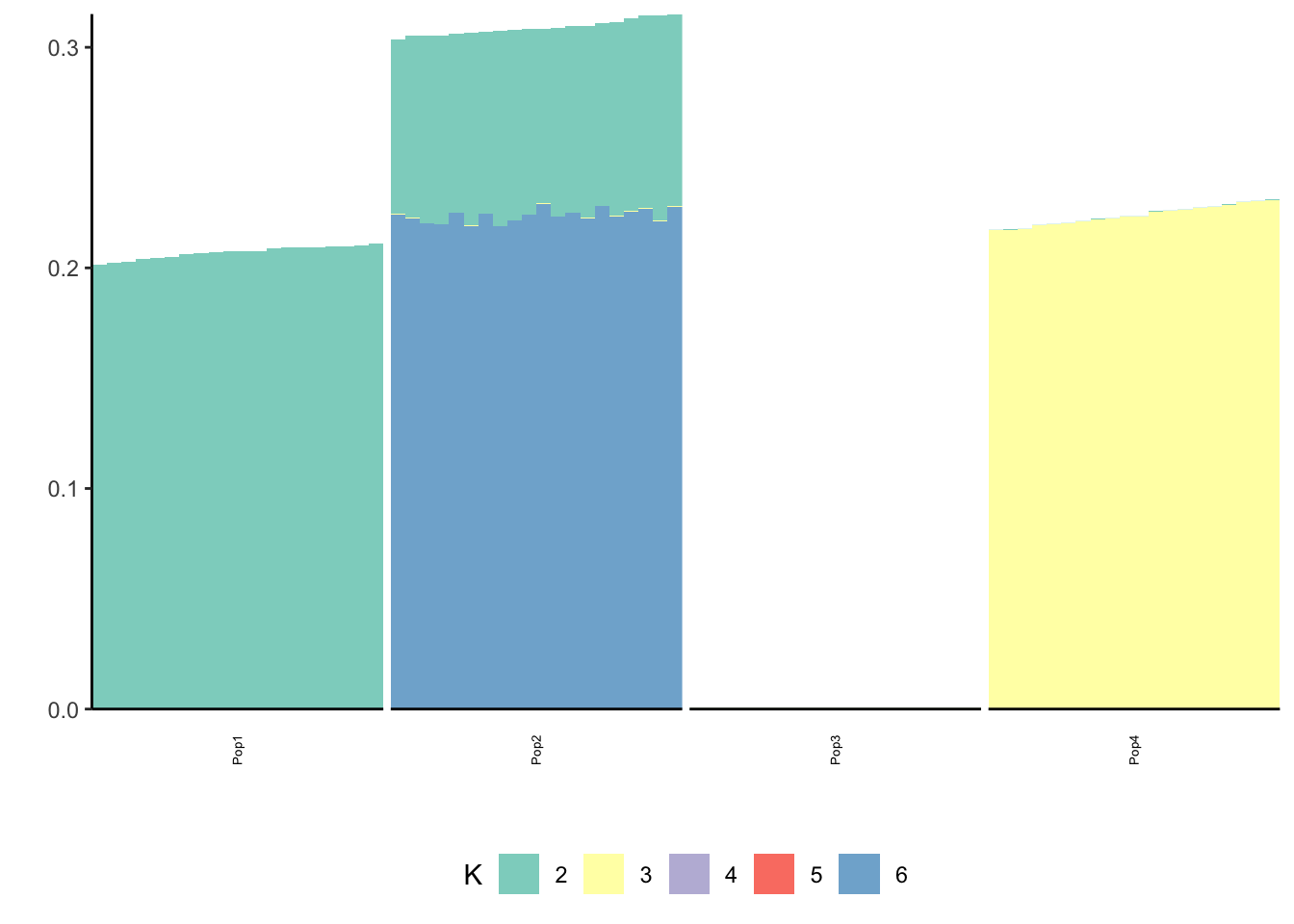

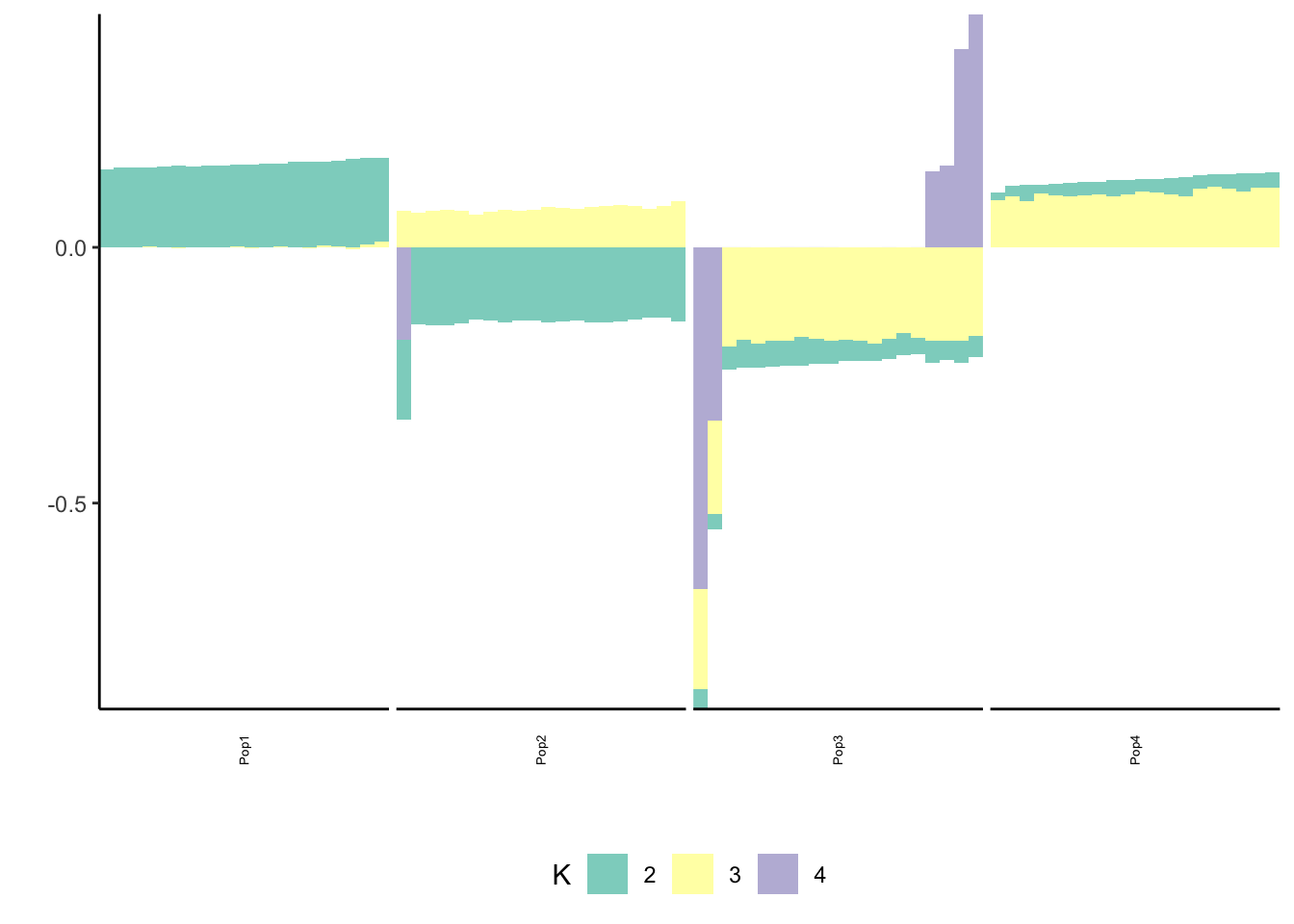

PSD (alstructure)

Here I fit the Pritchard, Stephens, and Donnelly model using alstructure for K=2,…,6:

K = 6

for(k in 2:K){

al_fit = alstructure(t(Y), d_hat = k)

Q = t(al_fit$Q_hat)

l_df = as.data.frame(Q)

colnames(l_df) = 1:k

l_df$ID = 1:n

l_df$pop = c(rep("Pop1", n_per_pop), rep("Pop2", n_per_pop),

rep("Pop3", n_per_pop), rep("Pop4", n_per_pop))

gath_l_df = l_df %>% gather(K, value, -ID, -pop)

p = structure_plot(gath_l_df,

colset="Set3",

facet_grp="pop",

facet_levels=paste0("Pop", 1:4),

fact_type="structure",

keep_leg=FALSE) +

ggtitle(paste0("K=", k))

print(p)

}

Here maybe \(K=3\) is the most interpretable? We see some signature of the split and admixture? This is already showing the difficulty of interpreting PSD fits in simple population genetic model. It also emphasizes thats the admixture gallery of many K plots needs to be shown to really understand what is going on not just a single one.

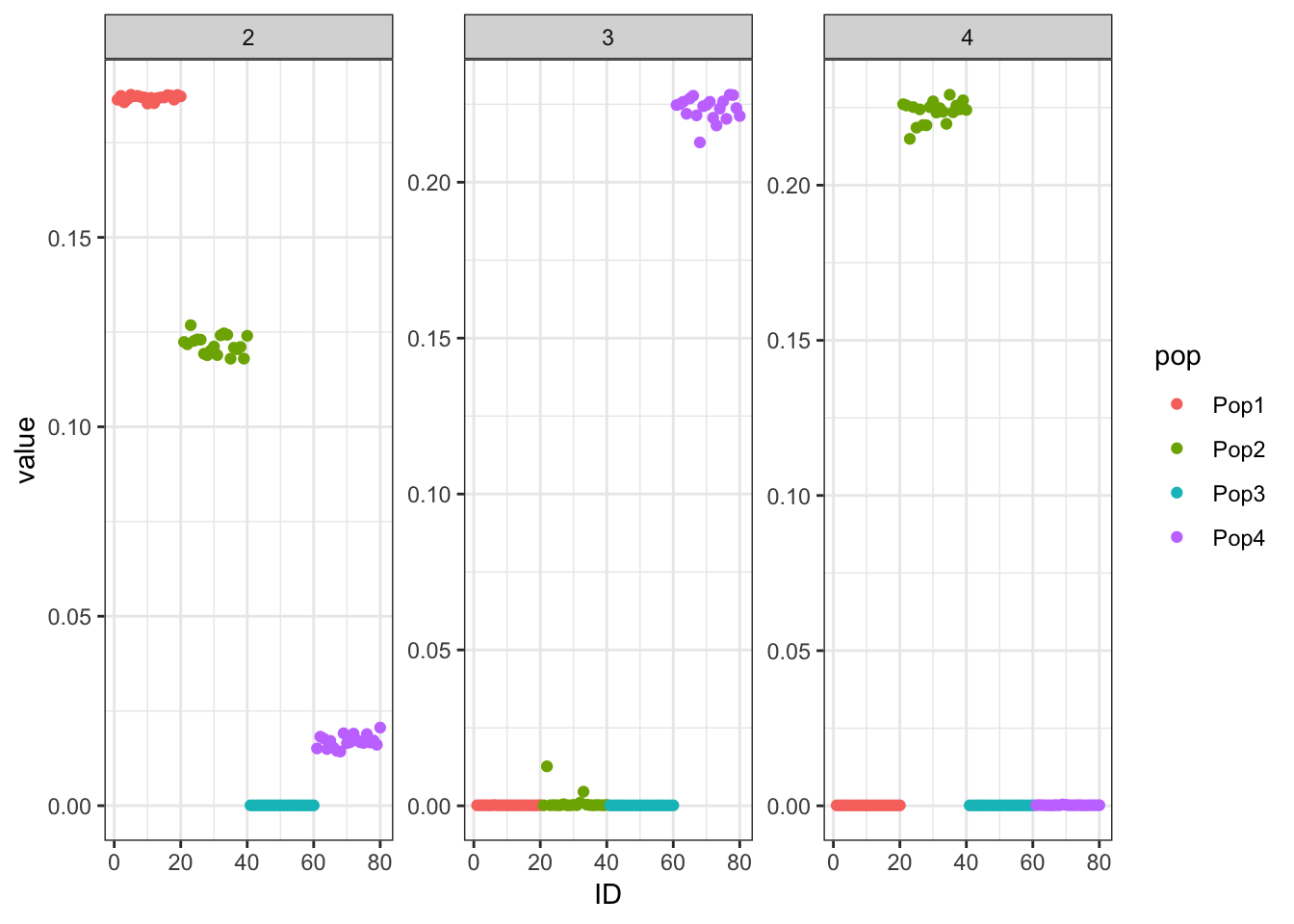

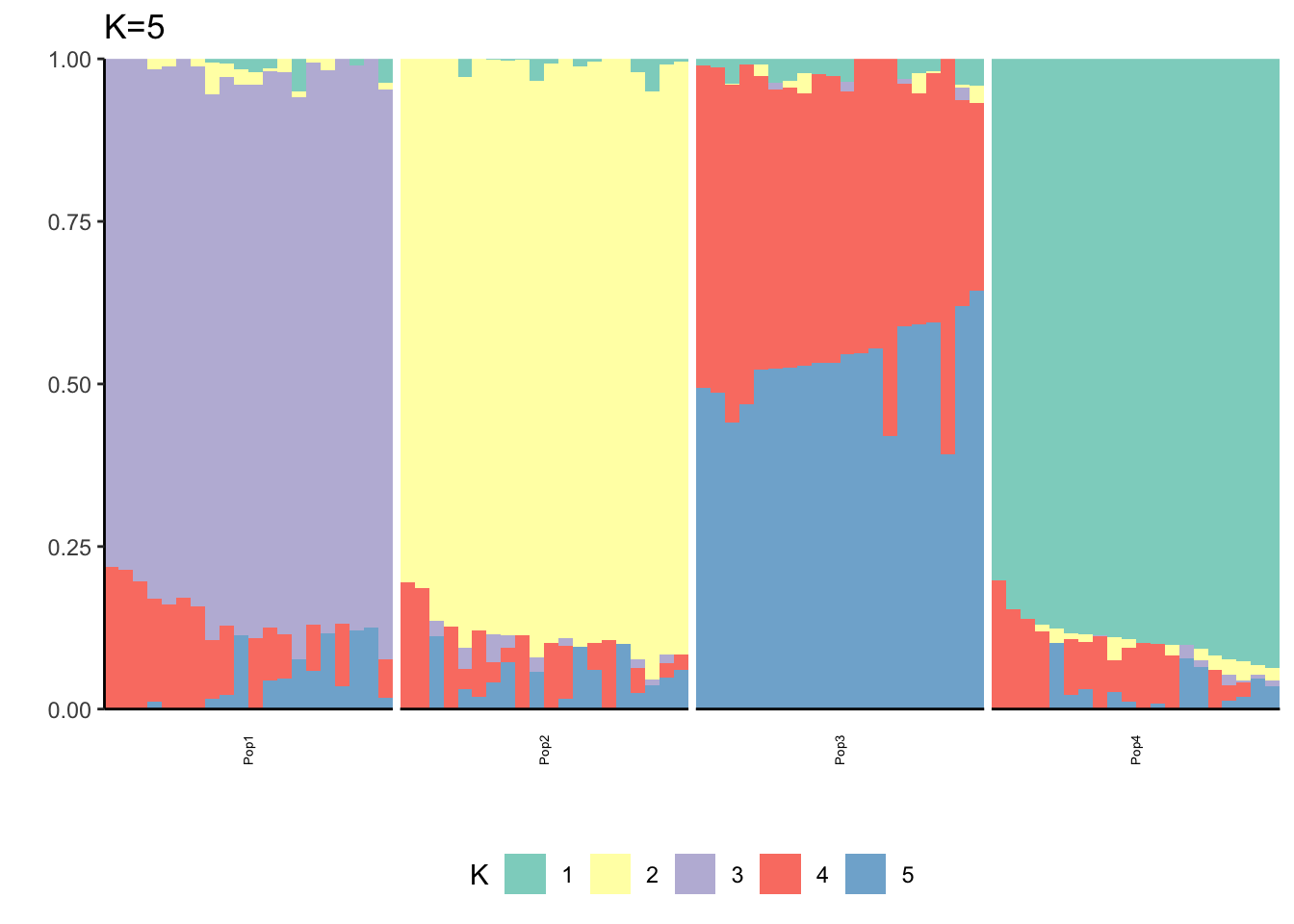

FLASH (Drift)

Here I apply Empirical Bayes matrix factorization with non-negative loadings and unconstrained factors:

Fix Loadings Greedy

Here I fix the first loadings vector to the 1 vector and only run the greedy algorithm:

flash_fit = flashier::flashier(Y,

greedy.Kmax=K,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n))) Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Adding factor 3 to flash object...

Adding factor 4 to flash object...

Adding factor 5 to flash object...

Adding factor 6 to flash object...

Adding factor 7 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 5 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -832533.9Hmm we are missing the population specific factor for population 4? We also see population 3 not being as strongly loaded on factor 3 which maybe is a signature of the admixture event?

Fix Loadings Final Backfit

Here I fix the first loadings vector to the 1 vector, run the greedy algorithm to pick out \(K\) from the data and then run a final backfit to clean up the greedy solution:

flash_fit = flashier::flashier(Y,

flash.init = flash_fit,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n)),

backfit="final",

backfit.order="dropout",

backfit.reltol=10)Initializing flash object...

Adding factor 7 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 6 factors...

Nullchecking 5 factors...

Factor 4 removed, increasing objective by 5.586e+00.

Factor 5 removed, increasing objective by 8.135e-02.

Adding factor 7 to flash object...

Factor doesn't increase objective and won't be added.

Backfitting 6 factors...

Nullchecking 5 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -821415This is not very interpretable.

Fix Loadings Alternating Backfit

Here I fix the first loadings vector to the 1 vector and run a scheme where backfitting is performed after greedily adding each factor:

flash_fit = flashier::flashier(Y,

greedy.Kmax=K,

prior.type=c("nonnegative", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

fix.dim=list(1),

fix.idx=list(1:n),

fix.vals=list(rep(1, n)),

backfit = "alternating",

backfit.order = "dropout",

backfit.reltol = 10)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Backfitting 2 factors...

Adding factor 3 to flash object...

Backfitting 3 factors...

Adding factor 4 to flash object...

Backfitting 4 factors...

Adding factor 5 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 3 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

print(p_res$p2)

print(flash_fit$objective)[1] -821643.7This is not very interpretable.

FLASH

Here I run FLASH with no sign constraints on the loadings or factors:

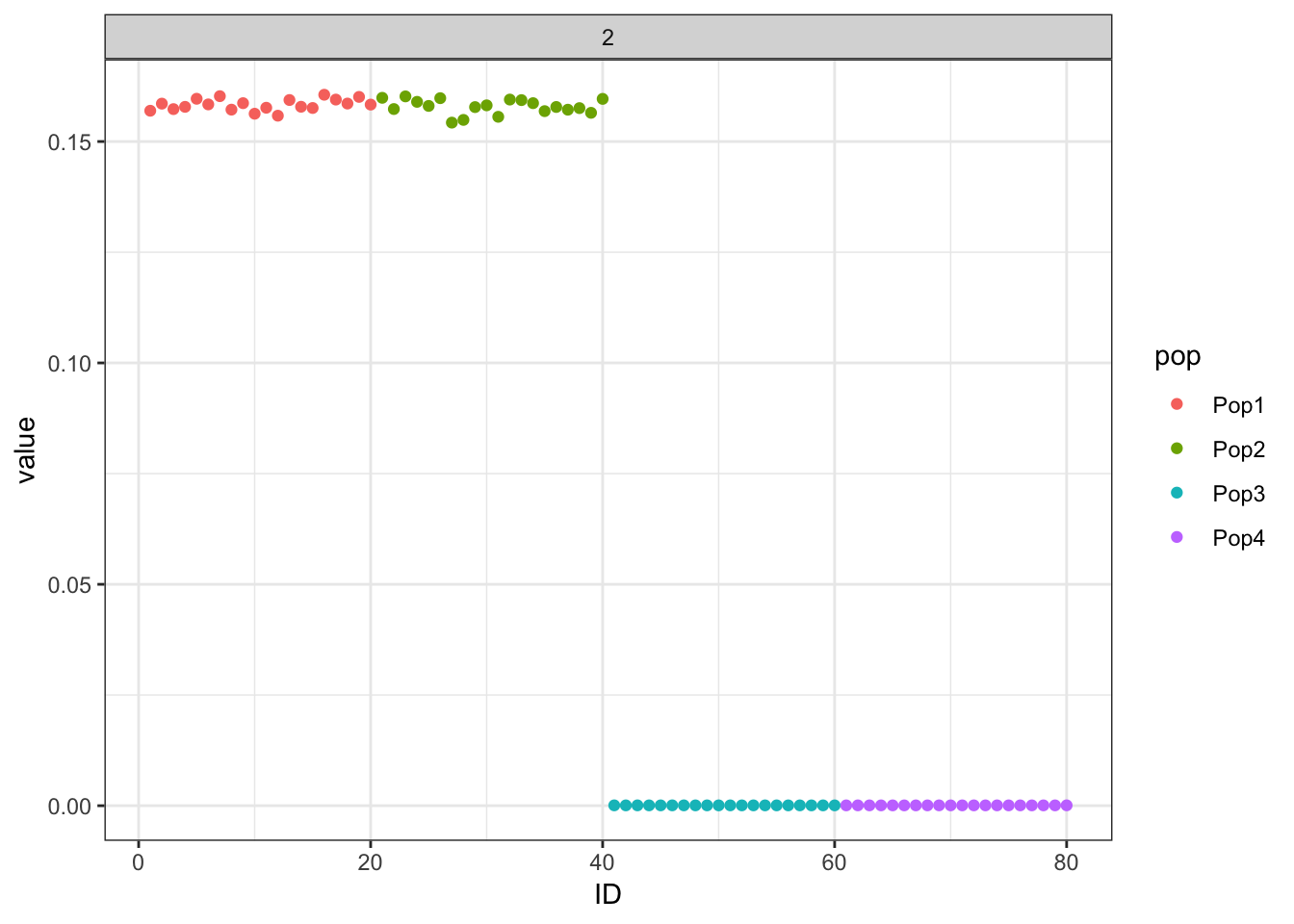

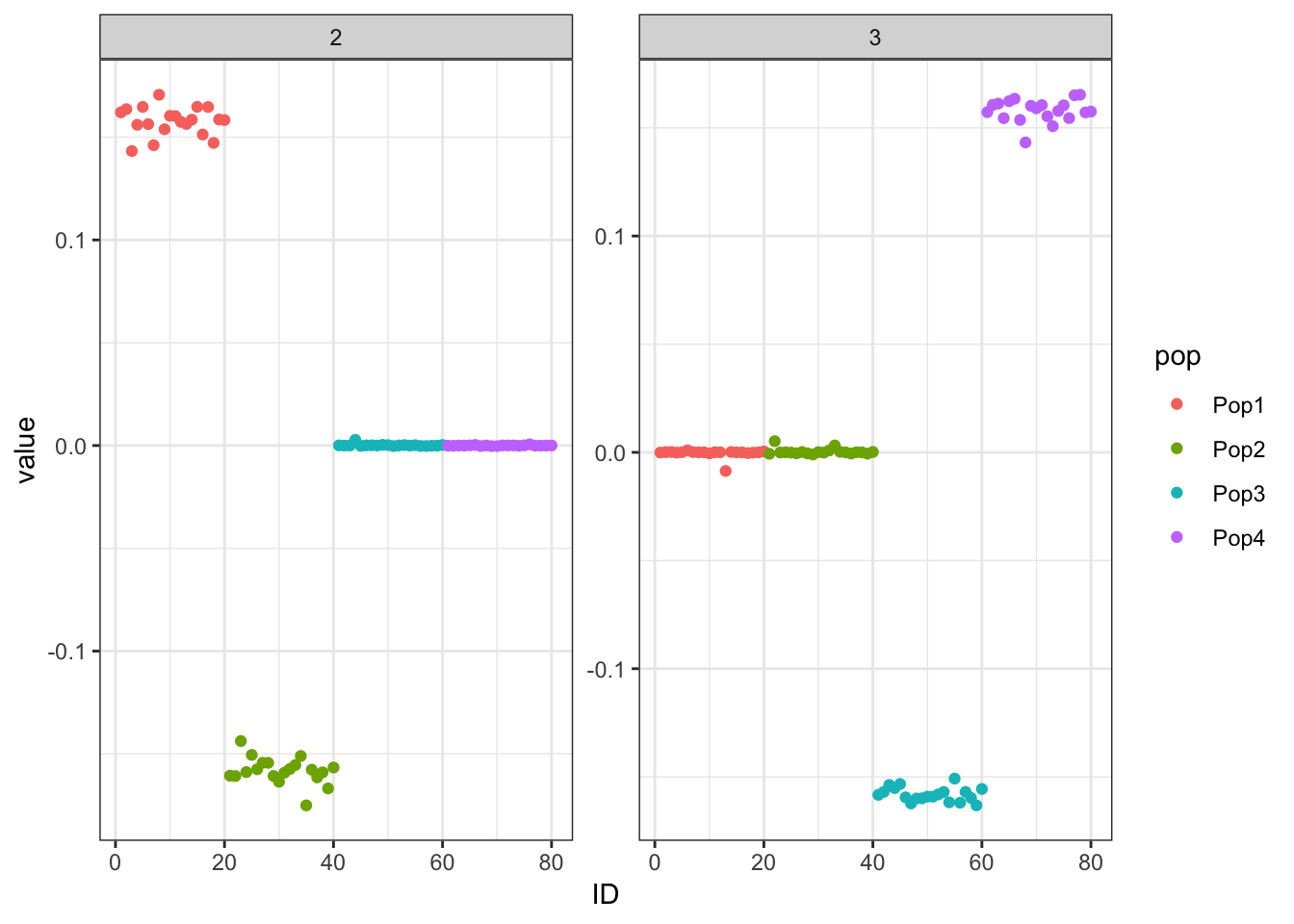

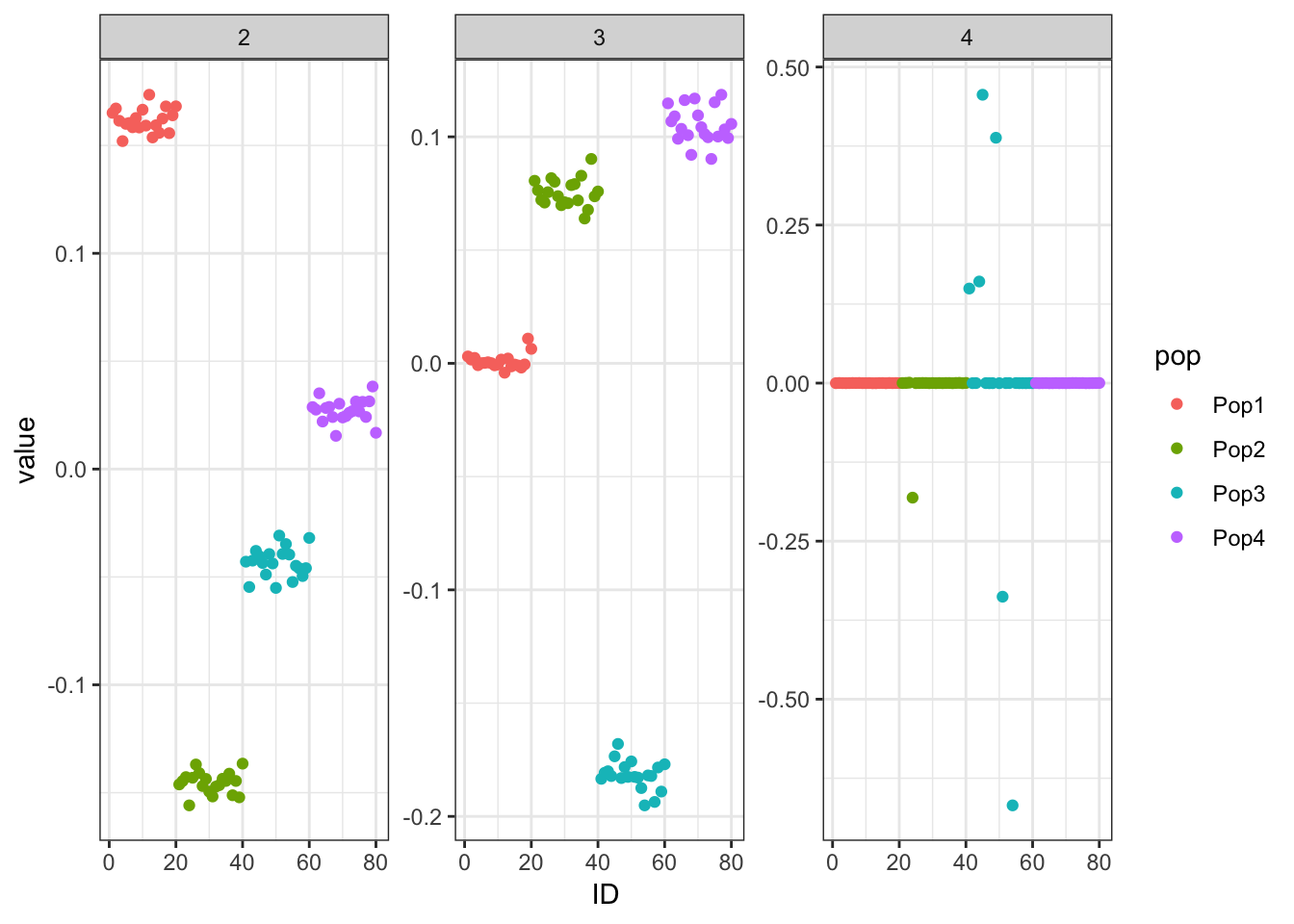

Mean Center Greedy

Here I mean center the data matrix before running greedy FLASH:

flash_fit = flashier::flashier(Y_c,

greedy.Kmax=K,

prior.type=c("normal.mixture", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Adding factor 3 to flash object...

Adding factor 4 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 3 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

| Version | Author | Date |

|---|---|---|

| 2fcfa81 | jhmarcus | 2019-05-02 |

print(p_res$p2)

| Version | Author | Date |

|---|---|---|

| 2fcfa81 | jhmarcus | 2019-05-02 |

print(flash_fit$objective)[1] -782260.6Mean Center Alternating Backfit

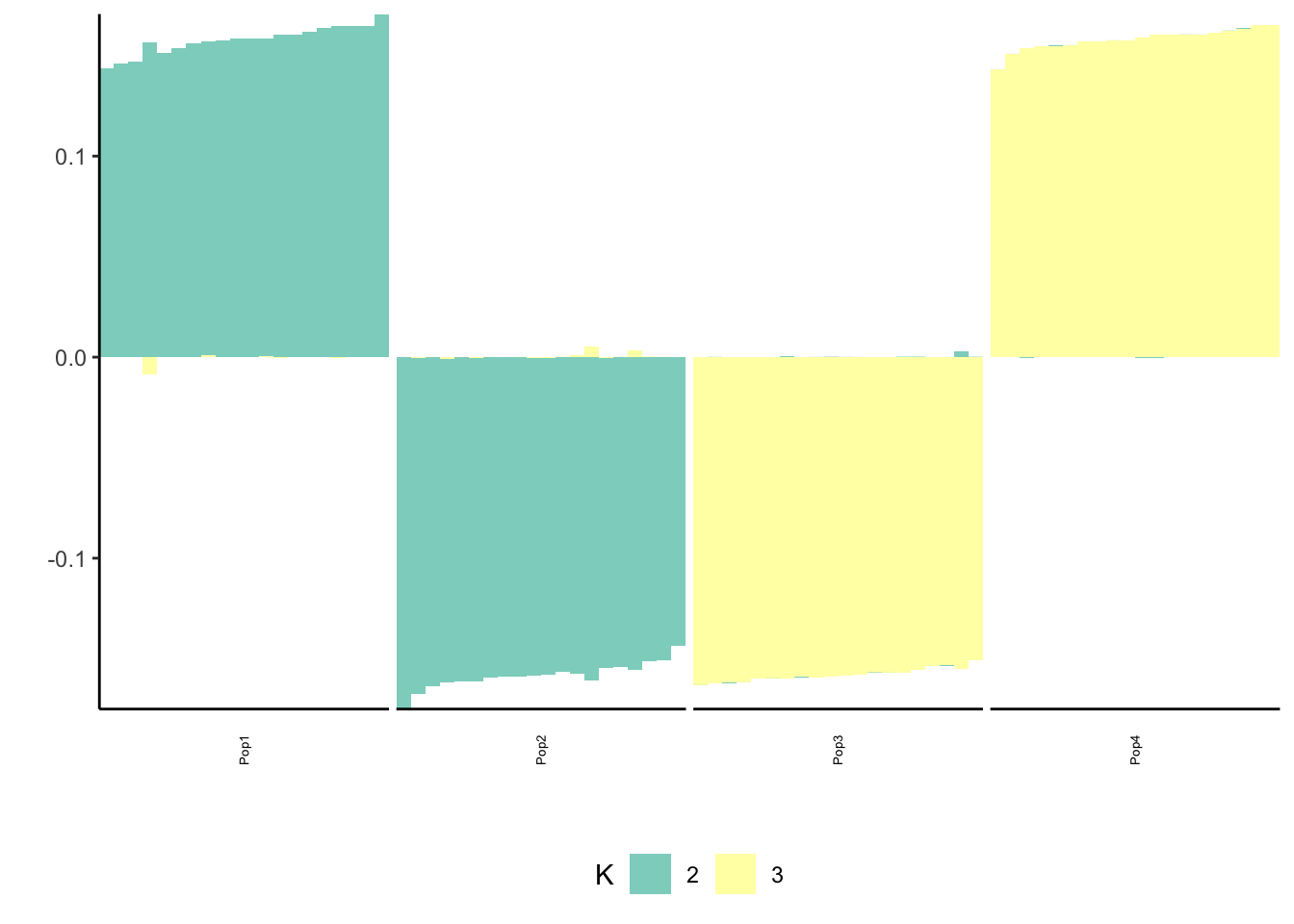

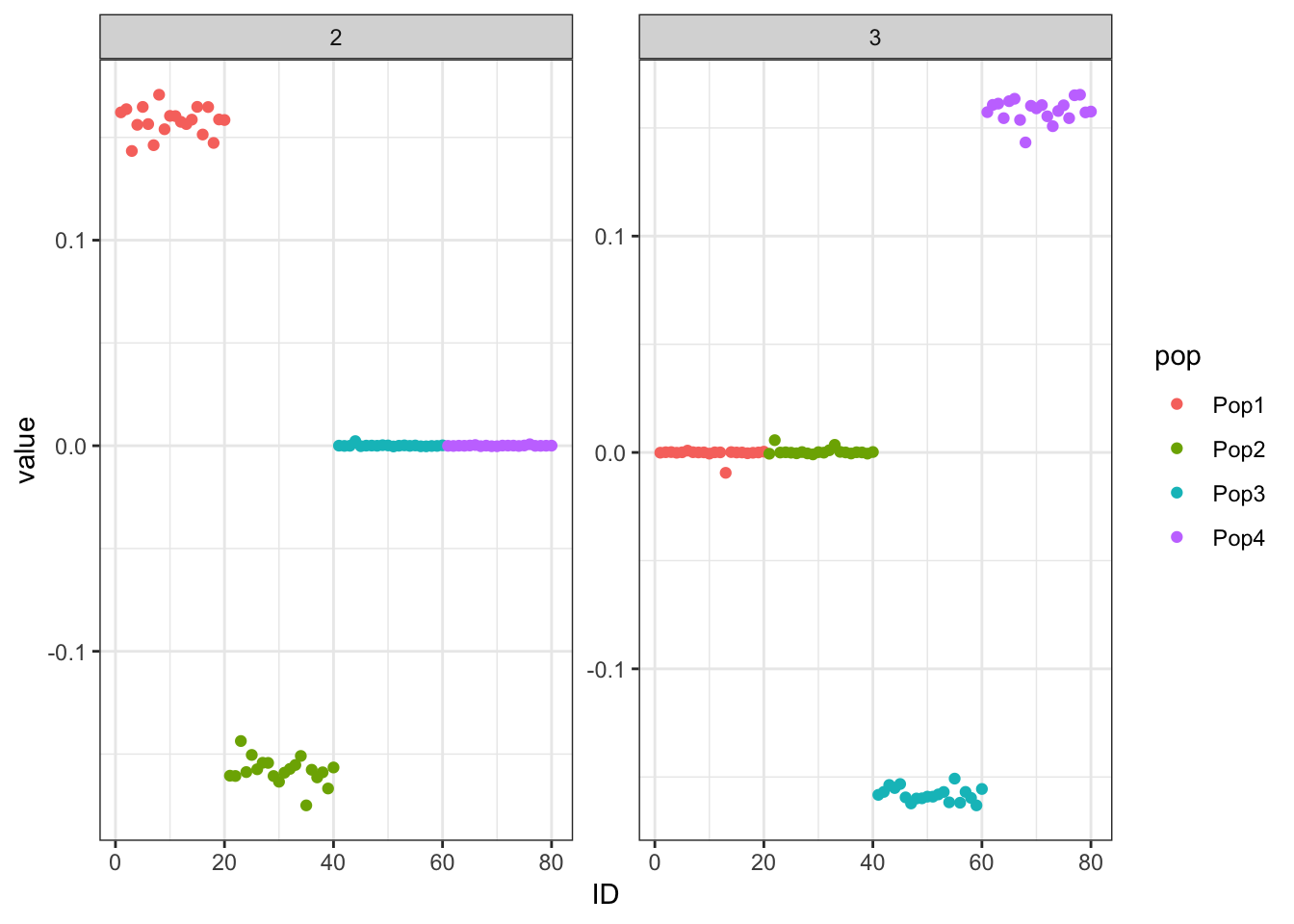

Here I mean center the data matrix before running greedy FLASH with alternating backfits:

flash_fit = flashier::flashier(Y_c,

greedy.Kmax=K,

prior.type=c("normal.mixture", "point.normal"),

ebnm.param=list(fixg=TRUE, g=list(pi0 = 0, a=1, mu=0)),

var.type=0,

backfit = "alternating",

backfit.order = "dropout",

backfit.reltol = 10)Initializing flash object...

Adding factor 1 to flash object...

Adding factor 2 to flash object...

Backfitting 2 factors...

Adding factor 3 to flash object...

Backfitting 3 factors...

Adding factor 4 to flash object...

Backfitting 4 factors...

Adding factor 5 to flash object...

Factor doesn't increase objective and won't be added.

Nullchecking 4 factors...

Wrapping up...

Done.p_res = plot_flash_loadings(flash_fit, n_per_pop)

print(p_res$p1)

| Version | Author | Date |

|---|---|---|

| 2fcfa81 | jhmarcus | 2019-05-02 |

print(p_res$p2)

| Version | Author | Date |

|---|---|---|

| 2fcfa81 | jhmarcus | 2019-05-02 |

print(flash_fit$objective)[1] -782233.1

sessionInfo()R version 3.5.1 (2018-07-02)

Platform: x86_64-apple-darwin13.4.0 (64-bit)

Running under: macOS 10.14.2

Matrix products: default

BLAS/LAPACK: /Users/jhmarcus/miniconda3/lib/R/lib/libRblas.dylib

locale:

[1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] RColorBrewer_1.1-2 alstructure_0.1.0 flashier_0.1.1

[4] flashr_0.6-3 tidyr_0.8.2 dplyr_0.8.0.1

[7] ggplot2_3.1.0

loaded via a namespace (and not attached):

[1] softImpute_1.4 zoo_1.8-4 tidyselect_0.2.5

[4] xfun_0.4 ashr_2.2-37 purrr_0.3.0

[7] reshape2_1.4.3 lattice_0.20-38 gmm_1.6-2

[10] colorspace_1.4-0 htmltools_0.3.6 stats4_3.5.1

[13] yaml_2.2.0 rlang_0.3.1 mixsqp_0.1-115

[16] pillar_1.3.1 glue_1.3.0 withr_2.1.2

[19] foreach_1.4.4 plyr_1.8.4 stringr_1.4.0

[22] munsell_0.5.0 gtable_0.2.0 workflowr_1.2.0

[25] lfa_1.12.0 mvtnorm_1.0-10 codetools_0.2-16

[28] evaluate_0.12 labeling_0.3 knitr_1.21

[31] doParallel_1.0.14 pscl_1.5.2 parallel_3.5.1

[34] Rcpp_1.0.0 corpcor_1.6.9 scales_1.0.0

[37] backports_1.1.3 tmvtnorm_1.4-10 truncnorm_1.0-8

[40] fs_1.2.6 digest_0.6.18 stringi_1.2.4

[43] ebnm_0.1-17 grid_3.5.1 rprojroot_1.3-2

[46] tools_3.5.1 sandwich_2.5-1 magrittr_1.5

[49] lazyeval_0.2.1 tibble_2.0.1 crayon_1.3.4

[52] whisker_0.3-2 pkgconfig_2.0.2 MASS_7.3-51.1

[55] Matrix_1.2-15 SQUAREM_2017.10-1 assertthat_0.2.0

[58] rmarkdown_1.11 iterators_1.0.10 R6_2.4.0

[61] git2r_0.23.0 compiler_3.5.1